- Taboola Blog

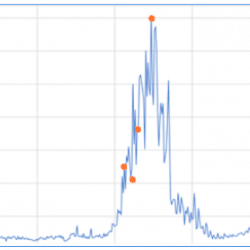

Taboola is responsible for billions of daily recommendations, and we are doing everything we can to make those recommendations fit each viewer’s personal taste and interests. We do so by updating our deep-learning-based models, increasing our computational resources, improving our exploration techniques, and much more. All of these changes, though, have one thing in common: we need to understand whether a change is for the better or not, and we need to do so while allowing many tests to run in parallel. We can think of many KPIs for new algorithmic modifications, such as system latency, diversity of recommendations, or user interaction, but at the end of the day, the one metric that matters most to us at Taboola is RPM (revenue per mille, or revenue per 1,000 recommendations), which indicates how much money and value we create for our customers on both sides: the […]
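To make the RPM definition concrete, here is a minimal sketch of computing RPM per test variant and the relative RPM change of a variant against its control. The `VariantStats` schema, the `rpm` and `rpm_lift` helpers, and all the numbers are hypothetical and purely illustrative; they are not Taboola's pipeline or data. The sketch also deliberately ignores statistical significance, which any real comparison between variants would need to account for.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Aggregated counts for one test variant (hypothetical schema)."""
    name: str
    revenue: float        # total revenue attributed to this variant
    recommendations: int  # number of recommendations this variant served


def rpm(stats: VariantStats) -> float:
    """Revenue per mille: revenue per 1,000 recommendations."""
    if stats.recommendations == 0:
        raise ValueError(f"variant {stats.name!r} served no recommendations")
    return stats.revenue / stats.recommendations * 1000


def rpm_lift(control: VariantStats, variant: VariantStats) -> float:
    """Relative RPM change of a variant vs. control (0.04 means +4%)."""
    return rpm(variant) / rpm(control) - 1


# Made-up numbers for illustration only.
control = VariantStats("control", revenue=1_250.0, recommendations=2_500_000)
new_model = VariantStats("new_model", revenue=1_300.0, recommendations=2_500_000)

print(f"control RPM:   {rpm(control):.3f}")
print(f"new_model RPM: {rpm(new_model):.3f}")
print(f"lift:          {rpm_lift(control, new_model):+.2%}")
```

Normalizing by 1,000 recommendations is what makes variants comparable even when they serve different amounts of traffic, which matters when many tests split traffic in parallel.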