- Taboola Blog

- Engineering

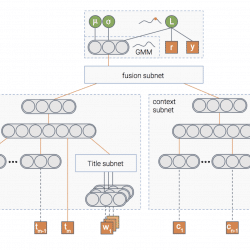

About a year ago we incorporated a new type of feature into one of our models used for recommending content items to our users. I’m talking about the thumbnail of the content item: up until that point we used the item’s title and metadata features. From a machine learning standpoint, the title is easier to work with than the thumbnail. Our model had matured and it was time to add the thumbnail to the party. This decision was the first step towards a horrible bias introduced into our train-test split procedure. Let me unfold the story… Setting the scene From our experience it’s hard to incorporate multiple types of features into a unified model. So we decided to take baby steps, and add the thumbnail to a model that uses only one feature – the title. There’s one thing you need to take into account when working with these […]

Knowledge sharing is critical for every company that wants to grow and improve. The bigger the company – the harder it gets. Inefficiency, a lack of alignment among your peers, difficulty training new workers – you name it. In this post we will take a look at the existing methods for knowledge sharing, how they can’t keep up with growth and fast-paced changes, and why people are your best resource for knowledge. What is Knowledge? In general, there are three main types of knowledge that need to be shared in a software company – Technical Knowledge, Product Knowledge and Business Knowledge. When a new employee begins their job, most companies will help them to learn, using some of the more traditional methods to share knowledge: Learn from others – via frontal training or assigning a mentor; Allow self-learning – online course, or from the company’s knowledge center (Atlassian, […]

If you’ve been following our tech blog lately, you might have noticed we’re using a special type of neural network called a Mixture Density Network (MDN). MDNs predict not only the expected value of a target, but also the underlying probability distribution. This blog post will focus on how to implement such a model using TensorFlow, from the ground up, including explanations, diagrams and a Jupyter notebook with the entire source code. What are MDNs and why are they useful? Real life data is noisy. While quite irritating, that noise is meaningful, as it gives a wider perspective of the origins of the data. The target value can have different levels of noise depending on the input, and this can have a major impact on our understanding of the data. This is better explained with an example. Assume the following quadratic function: Given x as input, we have a deterministic output […]
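The core idea in the excerpt above, predicting a full distribution instead of a single expected value, can be sketched without any framework. This is only a minimal illustration and not the post's TensorFlow implementation: it assumes a one-dimensional Gaussian mixture whose parameters would normally be produced by the network, and the function names `mixture_mean`, `mixture_variance` and `mixture_nll` are hypothetical.

```python
import math


def mixture_mean(weights, means):
    """Expected value of a Gaussian mixture: sum_k pi_k * mu_k."""
    return sum(w * m for w, m in zip(weights, means))


def mixture_variance(weights, means, sigmas):
    """Variance of the mixture: E[y^2] - E[y]^2."""
    mean = mixture_mean(weights, means)
    second_moment = sum(
        w * (s ** 2 + m ** 2) for w, m, s in zip(weights, means, sigmas)
    )
    return second_moment - mean ** 2


def mixture_nll(y, weights, means, sigmas):
    """Negative log-likelihood of y under the mixture.

    Minimizing this quantity over the training set is a common way
    to fit the mixture parameters an MDN outputs (assumption: the
    components are univariate Gaussians with sigma > 0).
    """
    density = sum(
        w * math.exp(-0.5 * ((y - m) / s) ** 2) / (s * math.sqrt(2 * math.pi))
        for w, m, s in zip(weights, means, sigmas)
    )
    return -math.log(density)
```

For example, an equal-weight mixture of two unit-variance Gaussians centered at -1 and 1 has mean 0 and variance 2, so the expected value alone would hide the fact that the target is bimodal.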

As VP of IT at Taboola, my teams and I are overwhelmed with logs, pinned down by their rate and volume. The job of the Production Site Reliability Engineering (SRE) team at Taboola is to keep the technology running smoothly and bring in as many insights as we can from the system, making sure that any and every technical issue (that isn’t self-healing or contained) is dealt with quickly. We also support this torrent of incoming data to make sure that any insights that can be gleaned from this data are found. With over 1B users discovering what’s interesting and new through the Taboola Feed, we can’t drop the ball or stop thinking about our logs, log management, where to store them and how to process them. This is the challenge of processing over one million lines of logs every second. To address this challenge, we engaged […]

The web is full of third-party scripts. Sites use them for ads, analytics, retargeting, and more. But this isn’t always the whole story. Scripts are unpredictable: they execute code, but you don’t know what this code actually does. With Taboola’s advertising video player solution, we struggle with third-party scripts daily. Working with different advertisers has exposed us to a variety of malicious behavior: sound violations, auto-scrolling and changes to the page DOM are just some examples. In this post we’ll take a closer look at how we detect sound violations. Steer clear of 3rd party script risks A sound violation is a state in which the video plays sound without user interaction. Several advertisers do this to ensure that the user will notice their ads. We struggled with this often and received lots of complaints from publishers. Sound violations should be prevented by the video player, but […]

So you just finished designing that great neural network architecture of yours. It has a blazing number of 300 fully connected layers interleaved with 200 convolutional layers with 20 channels each, where the result is fed as the seed of a glorious bidirectional stacked LSTM with a pinch of attention. After training you get an accuracy of 99.99%, and you’re ready to ship it to production. But then you realize the production constraints won’t allow you to run inference using this beast. You need the inference to be done in under 200 milliseconds. In other words, you need to chop off half of the layers, give up on using convolutions, and let’s not get started on the costly LSTM… If only you could make that amazing model faster! Sometimes you can Here at Taboola we did it. Well, not exactly… Let me explain. One of our models has to predict […]

Prioritizing Kafka Topic Consumption: How I Developed a Mechanism to Optimize Message Handling. Discover how to handle messages efficiently.

Imagine you’re walking down the street and you see a nice car you’re thinking of buying. Just by pointing your phone camera, you can see relevant content about that car. How cool is that?! That was our team’s idea, aptly named Taboola Zoom, which won us first place in the recent Taboola R&D hackathon! Every year Taboola holds a global R&D hackathon for its 350+ engineers aimed at creating ideas for cool potential products or just some fun experiments in general. This year, 33 teams worked for 36 hours to come up with ideas that are both awesome and helpful to Taboola. Some of the highlights included a tool that can accurately predict a user’s gender based on their browsing activity and an integration with social networks for Taboola Feed. Our team decided to create an AR (Augmented Reality) application that allows a user to get content recommendations, […]

Introduction One of the key creative aspects of an advertisement is choosing the image that will appear alongside the advertisement text. The advertiser’s aim is to select an image that will draw the attention of the users and get them to click on the ad, while remaining relevant to the advertisement text. Say that you’re an advertiser wanting to place a new ad titled “15 healthy dishes you must try”. There are endless possibilities for the image thumbnail to go along with this title, clearly some more clickable than others. One can apply best practices in choosing the thumbnail, but manually searching for the best image (out of possibly thousands that fit this title) is time-consuming and impractical. Moreover, there is no clear way of quantifying how much an image is related to a title and more importantly – how clickable the image is, compared to other […]

In the first post of the series we discussed three types of uncertainty that can affect your model – data uncertainty, model uncertainty and measurement uncertainty. In the second post we talked about various methods to handle the model uncertainty specifically. Then, in our third post we showed how we can use the model’s uncertainty to encourage exploration of new items in recommender systems. Wouldn’t it be great if we could handle all three types of uncertainty in a principled way using one unified model? In this post we’ll show you how we at Taboola implemented a neural network that estimates both the probability of an item being relevant to the user and the uncertainty of this prediction. Let’s jump to the deep water A picture is worth a thousand words, isn’t it? And a picture containing a thousand neurons?… In any case, this is the […]