Introduction

One of the key creative aspects of an advertisement is choosing the image that will appear alongside the advertisement text. The advertiser's aim is to select an image that will draw the attention of users and get them to click on the ad, while remaining relevant to the advertisement text.

Say that you’re an advertiser wanting to place a new ad titled “15 healthy dishes you must try”. There are endless possibilities for the image thumbnail that goes along with this title, and some are clearly more clickable than others. One can apply best practices when choosing the thumbnail, but manually searching for the best image (out of possibly thousands that fit this title) is time-consuming and impractical. Moreover, there is no clear way of quantifying how related an image is to a title and, more importantly, how clickable the image is compared to other options.

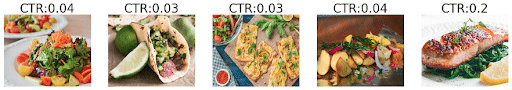

This is where our image search comes into play. We developed a text-to-image search algorithm that, given a proposed title, scans an image gallery to find the most suitable images and estimates their expected click-through rate (CTR). For example, here are the images returned by our algorithm for the query “15 healthy dishes you must try”, along with their predicted CTR:

Method

In a nutshell, our pipeline is composed of extracting title embeddings, extracting image embeddings and learning two transformations to map those embeddings to a joint space. We elaborate on each of these steps below.

Embeddings

First, let us consider the concept of embeddings. An embedding function receives an item (be it an image or text) and produces a numerical vector that captures the item's semantic meaning, such that if two items are semantically similar, their vectors are close. For example, the vectors for the two titles “Kylie Jenner, 19, Buys Fourth California Mansion at $12M” and “18 Facts about Oprah and her Extravagant Lifestyle” should be similar, or close in the embedding space, while the vectors for the titles “New Countries that are surprisingly good at these sports” and “Forgetting things? You should read this!” should be very different, or far away from each other in the embedding space. Similarly, the vectors for the following two images

should be close in the embedding space since their semantic meaning is the same (dogs making funny faces), even though their pixel values are vastly different.

At first glance, one might expect such an embedding function to be very explicit: for example, counting the number of faces in an image or the number of words in a sentence, or creating a list of the objects in an image. Such explicit functions are in fact extremely hard to implement, and in practice they do not preserve semantics very well, at least not in a way that allows items to be compared easily. In practice, embedding functions represent the information of an item in a much more implicit manner, as we will see next.

More concretely, let $A = \{a_0, a_1, \ldots, a_k\}$ be the space of all items to embed. An embedding function $f: A \to \mathbb{R}^d$ maps each item to a $d$-dimensional vector such that if two items $a_i, a_j$ are semantically similar, then the distance between their embeddings, $L(f(a_i), f(a_j))$, is small under some predefined distance metric $L$ (usually the standard Euclidean distance).
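To make the definition concrete, here is a minimal Python sketch that takes $L$ to be the standard Euclidean distance. The three 4-dimensional vectors are made-up stand-ins for $f(a_i)$ (real embeddings are learned and much higher-dimensional), and the item names are hypothetical.

```python
import math


def euclidean(u, v):
    """Standard Euclidean distance L(u, v) between two embedding vectors."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension d")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Hypothetical embeddings f(a_i); real ones are produced by a learned model.
embeddings = {
    "celebrity_mansion": [0.9, 0.1, 0.0, 0.2],
    "celebrity_lifestyle": [0.8, 0.2, 0.1, 0.3],
    "memory_tips": [0.0, 0.9, 0.7, 0.1],
}

d_similar = euclidean(embeddings["celebrity_mansion"], embeddings["celebrity_lifestyle"])
d_different = euclidean(embeddings["celebrity_mansion"], embeddings["memory_tips"])
print(f"similar pair: {d_similar:.3f}, dissimilar pair: {d_different:.3f}")
```

What matters is the ordering: the semantically similar pair should end up closer than the dissimilar one.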

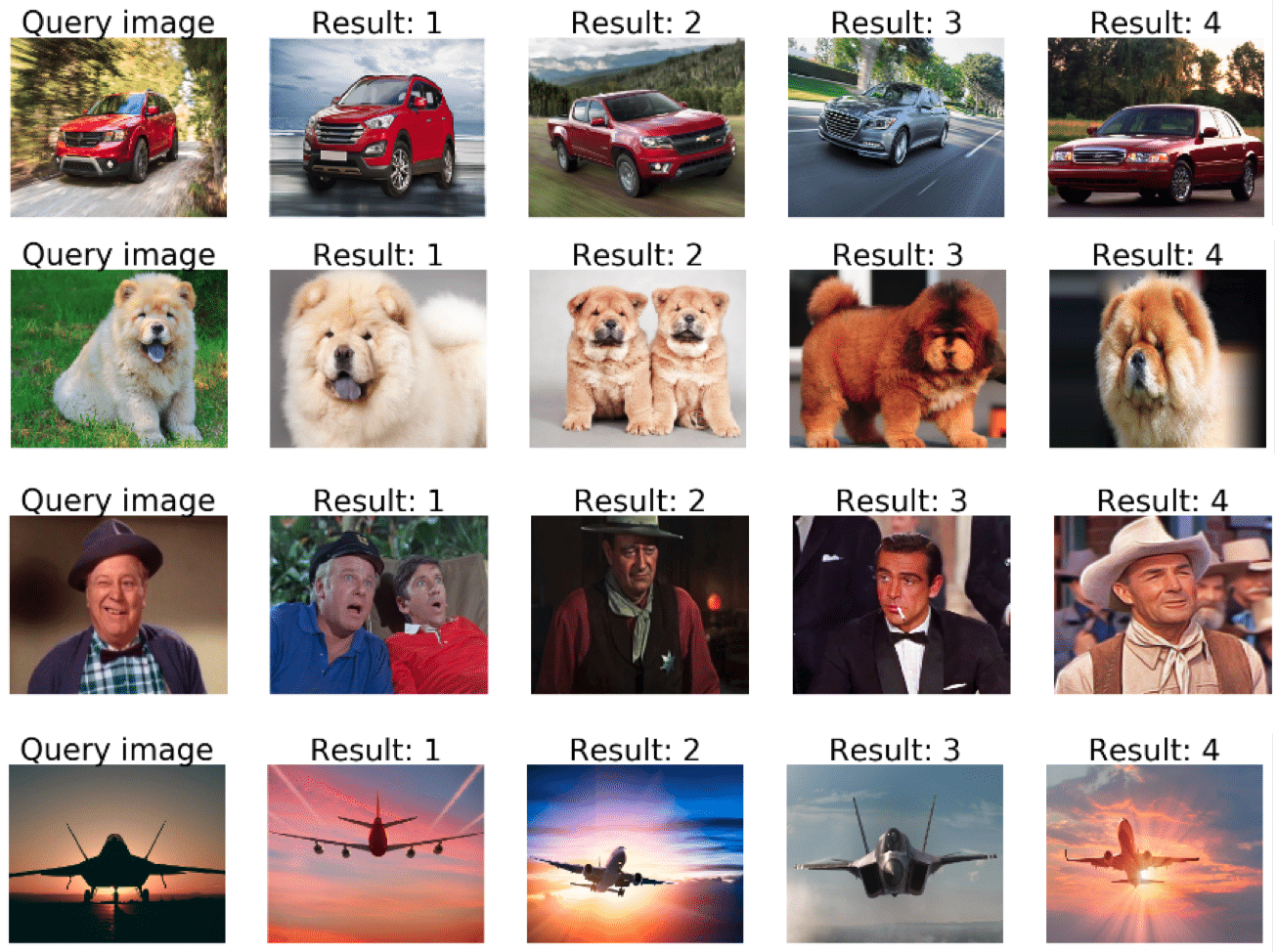

Image embedding

Pre-trained convolutional neural networks have been shown to be extremely useful for obtaining strong image representations [4]. In our framework, we use a ResNet-152 [5] model pretrained on the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) dataset [6]. Each image is fed to the pretrained network, and we take the activation of the res5c layer as a 2048-dimensional embedding vector. To illustrate the quality of the embeddings, we implemented a simple image search demo on our own Taboola image dataset. Here are the 4 most similar images for several image queries (the query is the leftmost image):
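The demo above boils down to a nearest-neighbor search over 2048-dimensional embeddings. The sketch below is illustrative only: it uses random vectors in place of real ResNet-152 features, hypothetical image ids, and cosine similarity on L2-normalized vectors. On unit-length vectors, ranking by cosine similarity gives the same order as ranking by Euclidean distance, since $\|u - v\|^2 = 2 - 2\cos(u, v)$; whether the demo normalized its embeddings is an assumption.

```python
import math
import random


def l2_normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def top_k_similar(query, gallery, k=4):
    """Return the k (image_id, cosine similarity) pairs closest to the query embedding."""
    q = l2_normalize(query)
    scored = []
    for image_id, emb in gallery.items():
        e = l2_normalize(emb)
        scored.append((sum(a * b for a, b in zip(q, e)), image_id))
    scored.sort(reverse=True)  # highest similarity first
    return [(image_id, sim) for sim, image_id in scored[:k]]


# Stand-in gallery: random 2048-d vectors instead of real ResNet-152 embeddings.
random.seed(0)
DIM = 2048
gallery = {f"img_{i}": [random.gauss(0, 1) for _ in range(DIM)] for i in range(100)}

# A slightly perturbed copy of img_7 plays the role of a visually similar query image.
query = [x + random.gauss(0, 0.1) for x in gallery["img_7"]]
results = top_k_similar(query, gallery, k=4)
print(results)
```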

Title embedding

Several sentence embedding methods have been proposed in the literature [8-10]. However, we found InferSent [11] to be particularly useful in our application. While common approaches to sentence representation are unsupervised or leverage the structure of the text for supervision, InferSent trains sentence embeddings in a fully supervised manner. To this end, [11] leverages the SNLI [12] dataset, which consists of 570K sentence pairs, each labeled with the relationship between the two sentences (contradiction, neutral or entailment). Several models were trained in [11], and simple max-pooling over the hidden states of a BiLSTM reached the best performance on a number of NLP tasks and benchmarks. Below are some example title queries and their nearest neighbors:
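The pooling step described above can be sketched in a few lines. Below, hypothetical toy hidden states (each the concatenation of a forward and a backward LSTM state) are max-pooled over time into a fixed-size sentence vector, and `nli_features` builds the pair representation $(u, v, |u - v|, u * v)$, where $*$ is the element-wise product, that [11] feeds to its three-way NLI classifier during training. The LSTM itself is omitted, and all names are illustrative.

```python
def max_pool(hidden_states):
    """Element-wise max over time of BiLSTM hidden states (each of size 2*h)."""
    if not hidden_states:
        raise ValueError("need at least one hidden state")
    return [max(column) for column in zip(*hidden_states)]


def nli_features(u, v):
    """Concatenate (u, v, |u - v|, u * v) into one pair representation."""
    return (list(u) + list(v)
            + [abs(a - b) for a, b in zip(u, v)]
            + [a * b for a, b in zip(u, v)])


# Toy hidden states: one row per token, 2*h = 4 (forward state + backward state).
premise_states = [[0.1, -0.3, 0.5, 0.0],
                  [0.4, 0.2, -0.1, 0.3],
                  [-0.2, 0.6, 0.2, 0.1]]
hypothesis_states = [[0.3, 0.1, 0.0, -0.4],
                     [0.2, 0.5, 0.4, 0.2]]

u = max_pool(premise_states)     # [0.4, 0.6, 0.5, 0.3]
v = max_pool(hypothesis_states)  # [0.3, 0.5, 0.4, 0.2]
features = nli_features(u, v)    # 4 * 4 = 16 values
print(u, v, len(features))
```

At search time only the pooled vector (`u` here) is used as the title embedding; the pair features matter only for training.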

Query: “15 Adorable Puppy Fails The Internet Is Obsessed With”

NN1: 18 Puppy Fails That The Internet Is Obsessed With

NN2: 13 Adorable Puppy Fails That Will To Make You Smile

NN3: 16 Dog Photoshoots The Internet Is Absolutely Obsessed With

Query: “10 Negative Side Effects of Low Vitamin D Levels”

NN1: How to Steer Clear of Side Effects From Blood Thinners

NN2: 7 Signs and Symptoms of Vitamin D Deficiency People Often Ignore

NN3: 8 Facts About Vitamin D and Rheumatoid Arthritis

Matching titles and images

Given a query title, we would like to match it with the most relevant images. For example, the query “20 new cars to buy in 2018” should return images of new, shiny, high-end cars. As mentioned before, our analysis of titles and images is not explicit: we do not detect the objects and named entities in a title and match them with images containing the same objects. Instead, we rely on the title and image embeddings, which implicitly capture the semantics of titles and images.

Next, we need a better grasp of what it means for a title to correspond to an image. To define it, we built a large training set composed of <advertisement title, advertisement image> pairs from advertisements that appeared in the Taboola widget. Given such data, we define that a title $t_0$ corresponds to an image $im_0$ if (and only if) a title $t_0'$ that is semantically similar to $t_0$ appeared in an advertisement along with an image $im_0'$ that is semantically similar to $im_0$. In other words, a title corresponds to an image (and vice versa) if they, or items semantically similar to them, appeared in an advertisement together.

Given this definition, a naive first solution is the following pipeline: given a title $t_0$, compute its embedding $e_0$. Next, find the most similar title embedding in our gallery, denoted by $e_0'$, with corresponding title $t_0'$. Finally, output the image that appeared in the original advertisement with $t_0'$.
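A minimal sketch of this naive baseline, assuming the title embeddings are already computed. The training pairs, their 3-dimensional embeddings, the image ids, and the function name are hypothetical; the titles are borrowed from examples in this post.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def naive_image_for_title(query_embedding, training_pairs):
    """Naive baseline: return the image of the single most similar training title.

    training_pairs: list of (title_text, title_embedding, image_id) from past ads.
    Returns (t0_prime, image_id).
    """
    best_title, _, best_image = min(
        training_pairs, key=lambda pair: euclidean(query_embedding, pair[1]))
    return best_title, best_image


# Hypothetical training pairs with made-up 3-d title embeddings.
training_pairs = [
    ("The 30 Richest Canadians Ranked By Wealth", [0.9, 0.1, 0.0], "img_canada_rich"),
    ("15 Adorable Puppy Fails The Internet Is Obsessed With", [0.0, 0.9, 0.2], "img_puppy"),
    ("7 Signs and Symptoms of Vitamin D Deficiency People Often Ignore", [0.1, 0.0, 0.9], "img_vitamin"),
]

query_e0 = [0.8, 0.2, 0.1]  # stand-in for e_0 of "30 Richest Actresses in America"
t0_prime, image = naive_image_for_title(query_e0, training_pairs)
print(t0_prime, image)
```

Only one training pair ever influences the answer, which is exactly the limitation discussed next.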

While this is a sound solution, it does not fully leverage our training set, since it uses the information from only one <title, image> training pair. Indeed, our experiments showed that the images suggested in this manner were far less suitable than those suggested by an approach that uses the entire training gallery. To leverage the entire training set of titles and images, we would like to implicitly capture the semantic similarity of titles, the semantic similarity of images, and the given correspondence between them in our training set. To this end, we employ an approach that learns a joint title-image space in which both titles and images reside. This allows us to directly measure the distance between a title and an image and, in particular, to find the nearest neighbor image of a given query title.

For example, under the naive approach, the closest title to the query “30 Richest Actresses in America” is “The 30 Richest Canadians Ranked By Wealth”, which was displayed along with the following images:

For reference, here are the results obtained by our method, which is explained next:

Learning a joint space

Assume we have $m$ pairs of titles with their corresponding images $\langle t_1, im_1\rangle, \langle t_2, im_2\rangle, \ldots, \langle t_m, im_m\rangle$. For simplicity of notation, we use the same symbols $\langle t_i, im_i\rangle$ for their embeddings. We denote the joint title-image space by $V$. We would like to find two mappings, $F_t: \{t_i\} \to V$ from the space of title embeddings to the joint space $V$, and $F_{im}: \{im_i\} \to V$ from the space of image embeddings to the same joint space, such that the distance between $F_t(t_i)$ and $F_{im}(im_i)$ in $V$ is small.

Numerous methods for obtaining such mappings exist [13-16; see 19 for an extensive survey]. However, we found a simple statistical method, Canonical Correlation Analysis (CCA) [17], to be useful in our application.

Let $T = [t_1, t_2, \ldots, t_m]$ be the set of title embeddings and $M = [im_1, im_2, \ldots, im_m]$ be the set of image embeddings. CCA learns linear projections $F_t$ and $F_{im}$ such that the correlation between the title embeddings and the image embeddings in the joint space is maximized. Mathematically, CCA finds $F_t$ and $F_{im}$ that maximize $\rho = \operatorname{corr}(F_t T, F_{im} M)$, a problem that is solved by formulating it as an eigenvalue problem. Note that in our implementation $F_t$ and $F_{im}$ are linear projections; learning non-linear [20] or even deep [21] transformations could further improve the results and is left for future work.
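For a single pair of projection directions $w_t$ and $w_{im}$, CCA maximizes $\rho = \frac{w_t^\top \Sigma_{t,im} w_{im}}{\sqrt{w_t^\top \Sigma_{tt} w_t}\,\sqrt{w_{im}^\top \Sigma_{im,im} w_{im}}}$, where the $\Sigma$ terms are the (cross-)covariance matrices of the centered embeddings, and the optimal $w_t$ satisfies $\Sigma_{tt}^{-1}\Sigma_{t,im}\Sigma_{im,im}^{-1}\Sigma_{im,t}\, w_t = \rho^2 w_t$. The NumPy sketch below solves the equivalent problem by whitening both views and taking an SVD of the whitened cross-covariance. It is an illustration, not our production code: rows are samples (so projections multiply on the right, `T @ W_t`), the small ridge term `reg` is an added assumption for numerical stability, and the data is synthetic, generated from a shared latent factor.

```python
import numpy as np


def inv_sqrt(cov):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    eigvals, eigvecs = np.linalg.eigh(cov)
    return eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T


def fit_cca(T, M, k, reg=1e-3):
    """Fit linear CCA projections.

    T: (m, d_t) title embeddings, M: (m, d_im) image embeddings, one row per ad.
    Returns W_t (d_t, k), W_im (d_im, k), the two means, and the top-k canonical correlations.
    """
    mu_t, mu_im = T.mean(axis=0), M.mean(axis=0)
    Tc, Mc = T - mu_t, M - mu_im
    m = T.shape[0]
    S_tt = Tc.T @ Tc / (m - 1) + reg * np.eye(T.shape[1])
    S_mm = Mc.T @ Mc / (m - 1) + reg * np.eye(M.shape[1])
    S_tm = Tc.T @ Mc / (m - 1)
    A, B = inv_sqrt(S_tt), inv_sqrt(S_mm)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    U, s, Vt = np.linalg.svd(A @ S_tm @ B)
    W_t = A @ U[:, :k]
    W_im = B @ Vt.T[:, :k]
    return W_t, W_im, mu_t, mu_im, s[:k]


# Synthetic data: titles and images are two noisy linear views of a shared 3-d latent factor.
rng = np.random.default_rng(0)
m = 500
Z = rng.normal(size=(m, 3))
T = Z @ rng.normal(size=(3, 20)) + 0.1 * rng.normal(size=(m, 20))  # stand-in title embeddings
M = Z @ rng.normal(size=(3, 30)) + 0.1 * rng.normal(size=(m, 30))  # stand-in image embeddings

W_t, W_im, mu_t, mu_im, corrs = fit_cca(T, M, k=3)
print(np.round(corrs, 3))  # should be close to 1 for this low-noise synthetic data
```

Mapping a title or image into the joint space is then just `(t - mu_t) @ W_t` or `(im - mu_im) @ W_im`.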

Click Through Rate estimation

Now, given a proposed image, we would like to estimate its click-through rate (CTR): the number of clicks divided by the number of appearances. Many of the images in our gallery have already appeared in campaigns, so we can use their historic data. For the others, we predict the CTR from their image embeddings with a simple linear Support Vector Machine regression model [18].
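The rule above (historic CTR when available, a model prediction otherwise) might look like the following NumPy sketch. It is a stand-in rather than the model we trained: it fits a linear SVR with the squared epsilon-insensitive loss by plain gradient descent instead of calling an SVM library, and the data, the `history` dictionary, the image ids, and the hyperparameters are all hypothetical.

```python
import numpy as np


def fit_linear_svr(X, y, epsilon=5e-4, lam=1e-4, lr=0.1, epochs=2000):
    """Linear SVR with the squared epsilon-insensitive loss, fitted by gradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, |y - (X @ w + b)| - epsilon) ** 2).
    """
    m, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        r = y - (X @ w + b)                                          # residuals
        excess = np.sign(r) * np.maximum(0.0, np.abs(r) - epsilon)  # zero inside the tube
        grad_w = lam * w - (2.0 / m) * (X.T @ excess)
        grad_b = -(2.0 / m) * excess.sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def estimate_ctr(image_id, embedding, history, w, b):
    """Historic CTR (clicks / appearances) if the image ran before, else the SVR prediction."""
    if image_id in history:
        clicks, impressions = history[image_id]
        return clicks / impressions
    return float(np.clip(embedding @ w + b, 0.0, 1.0))


# Synthetic training data: stand-in image embeddings (real ones are 2048-d) and CTRs.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 5))
w_true = np.array([0.01, -0.005, 0.0, 0.008, 0.002])
y = 0.02 + X @ w_true + rng.normal(scale=5e-4, size=200)
w, b = fit_linear_svr(X, y)

history = {"img_42": (37, 1000)}  # hypothetical (clicks, impressions)
print(estimate_ctr("img_42", None, history, w, b))  # 0.037, from history
print(estimate_ctr("img_new", np.array([1.0, 0, 0, 0, 0]), history, w, b))
```

Clipping to $[0, 1]$ keeps model predictions valid as rates; that step is an assumption of this sketch.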

Full image search pipeline

Our final image search pipeline works as follows. First, we take a large gallery of titles and images and run the ResNet-152 model and the InferSent model to obtain image and title embeddings. Next, we apply CCA to learn two linear projections to a joint space, and multiply the image embeddings by the learned image projection to map them into that joint space. All of these steps are run offline.

Now, given a new title from the advertiser, we run the pretrained InferSent model to obtain its embedding. The embedding is then multiplied by the learned title projection to map it into the joint title-image space. Finally, we return the 10 closest images in that space along with their predicted CTR.
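The online step can be sketched as follows, assuming the CCA projections and the joint-space image embeddings were already computed offline. In this sketch `W_t` and `W_im` are random placeholders for the learned projections, the sizes are toy values, the title embedding is random rather than an InferSent output, and `search_images` is a hypothetical helper name.

```python
import numpy as np


def search_images(title_embedding, W_t, mu_t, image_joint, image_ids, predicted_ctr, k=10):
    """Project a title into the joint space and return the k closest images with their CTR."""
    q = (title_embedding - mu_t) @ W_t                    # title -> joint space V
    dists = np.linalg.norm(image_joint - q, axis=1)       # Euclidean distance in V
    order = np.argsort(dists)[:k]
    return [(image_ids[i], float(dists[i]), predicted_ctr[image_ids[i]]) for i in order]


rng = np.random.default_rng(2)
d_t, d_im, k_joint, n_images = 16, 32, 4, 50              # toy sizes
W_t = rng.normal(size=(d_t, k_joint))                     # placeholder for the learned title projection
W_im = rng.normal(size=(d_im, k_joint))                   # placeholder for the learned image projection
mu_t, mu_im = np.zeros(d_t), np.zeros(d_im)

# Offline: map every gallery image into the joint space once.
M = rng.normal(size=(n_images, d_im))
image_joint = (M - mu_im) @ W_im
image_ids = [f"img_{i}" for i in range(n_images)]
predicted_ctr = {iid: float(c) for iid, c in zip(image_ids, rng.uniform(0.005, 0.05, n_images))}

# Online: embed the advertiser's title (random stand-in here) and search.
title = rng.normal(size=d_t)
results = search_images(title, W_t, mu_t, image_joint, image_ids, predicted_ctr, k=10)
for image_id, dist, ctr in results[:3]:
    print(f"{image_id}: distance={dist:.2f}, predicted CTR={ctr:.3f}")
```

Because the image side is projected once offline, each query costs one small matrix-vector product plus a distance computation over the gallery.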

Examples

Now for the fun part. Here are some qualitative results of our pipeline using real titles that appeared in some of our recommendations. For each title, we present the top 8 images returned by our system with their predicted click-through rate.

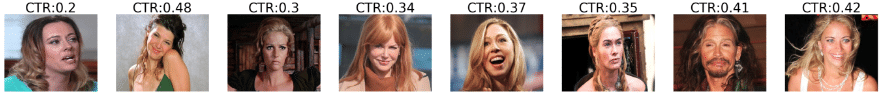

Title: “30 Richest Actresses in America”

Images returned by our system:

Notice this is the same title we discussed earlier when comparing our approach with the naive solution. Here we also show the predicted CTR. We assume our model mistook Steven Tyler for an actress due to his long hair…

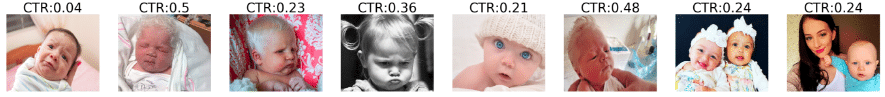

Title: “Baby Born With White Hair Stumps Doctors”

Images returned by our system:

Note that some of the rightmost images are a bit off since they are farther away from the title embedding in the joint space.

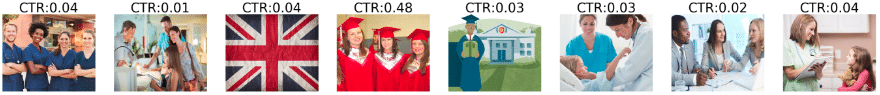

Our algorithm also works for longer titles: “Now You Can Turn Your Passion For Helping Others Into A Career – Become A Nurse 100% Online. Find Enrollment & Scholarships Compare Schedules Now!”

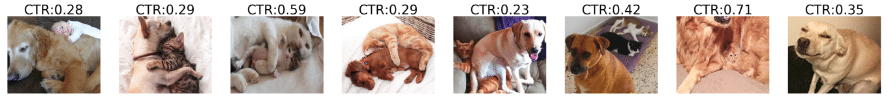

Title: “20 Ridiculously Adorable Pet Photos That Went Viral”

Images returned by our system:

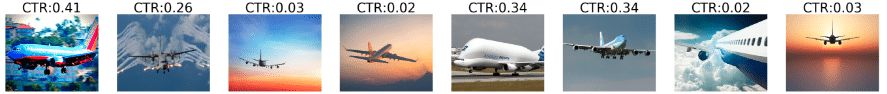

Title: “Don’t Miss this Incredible Offer if You Fly with Delta Ends 11/8!”

Images returned by our system (notice the leftmost image):

Wrapping up

Our goal in this post was to illustrate our system for solving a real-world problem, image search using free text, specifically as a tool for advertisers to choose which image to use for a given ad.

While we developed the system to meet our own needs, it is not tailored to advertising in any way and can be used to implement image search in other domains and industries. In fact, some of the concepts described above, such as embeddings, are even more general and can be useful for a variety of applications.

In its current implementation, the system returns the 10 most similar images for a given query without any other considerations. In future work, we intend to add an option to return only images with high CTR, and to give the user (i.e., the advertiser) control over the importance of visual similarity in the returned images relative to their CTR (e.g., return images that fit the semantics of the title less closely but have a higher CTR). We also intend to add functionality for controlling how diverse the returned images are relative to their semantic similarity with the title (e.g., return a set of images that might fit the title less well, but are diverse and provide the advertiser with more options).
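One possible way to expose the similarity-versus-CTR trade-off described above is a single blending weight. The sketch below is purely hypothetical: the `rerank` helper, the weight `alpha`, and the min-max normalization are illustrative choices, not a feature of the current system. It re-ranks candidates from the search step by a weighted sum of normalized similarity and normalized predicted CTR.

```python
def rerank(candidates, alpha):
    """Re-rank (image_id, similarity, ctr) candidates by a blended score.

    alpha = 1.0 ranks purely by semantic similarity, alpha = 0.0 purely by predicted CTR.
    Both signals are min-max normalized to [0, 1] so that alpha is a meaningful trade-off.
    """
    def normalize(values):
        lo, hi = min(values), max(values)
        return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

    sims = normalize([cand[1] for cand in candidates])
    ctrs = normalize([cand[2] for cand in candidates])
    scored = [(alpha * s + (1 - alpha) * c, cand[0])
              for s, c, cand in zip(sims, ctrs, candidates)]
    return [image_id for _, image_id in sorted(scored, reverse=True)]


# Hypothetical candidates: (image_id, similarity to the title, predicted CTR).
candidates = [("img_a", 0.92, 0.011), ("img_b", 0.85, 0.030), ("img_c", 0.60, 0.045)]
print(rerank(candidates, alpha=1.0))  # ['img_a', 'img_b', 'img_c']
print(rerank(candidates, alpha=0.0))  # ['img_c', 'img_b', 'img_a']
```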

References

[1] Dalal, Navneet, and Bill Triggs. “Histograms of oriented gradients for human detection.” Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on. Vol. 1. IEEE, 2005.

[2] Ojala, Timo, Matti Pietikäinen, and David Harwood. “A comparative study of texture measures with classification based on featured distributions.” Pattern recognition 29.1 (1996): 51-59.

[3] Csurka, Gabriella, et al. “Visual categorization with bags of keypoints.” Workshop on statistical learning in computer vision, ECCV. Vol. 1. No. 1-22. 2004.

[4] Razavian, Ali Sharif, et al. “CNN features off-the-shelf: an astounding baseline for recognition.” Computer Vision and Pattern Recognition Workshops (CVPRW), 2014 IEEE Conference on. IEEE, 2014.

[5] He, Kaiming, et al. “Deep residual learning for image recognition.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

[6] Russakovsky, Olga, et al. “Imagenet large scale visual recognition challenge.” International Journal of Computer Vision 115.3 (2015): 211-252.

[7] Maaten, Laurens van der, and Geoffrey Hinton. “Visualizing data using t-SNE.” Journal of machine learning research 9.Nov (2008): 2579-2605.

[8] Le, Quoc, and Tomas Mikolov. “Distributed representations of sentences and documents.” International Conference on Machine Learning. 2014.

[9] Kiros, Ryan, et al. “Skip-thought vectors.” Advances in neural information processing systems. 2015.

[10] Hill, Felix, Kyunghyun Cho, and Anna Korhonen. “Learning Distributed Representations of Sentences from Unlabelled Data.” Proceedings of NAACL-HLT. 2016.

[11] Conneau, Alexis, et al. “Supervised Learning of Universal Sentence Representations from Natural Language Inference Data.” Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. 2017.

[12] Bowman, Samuel R., et al. “A large annotated corpus for learning natural language inference.” arXiv preprint arXiv:1508.05326 (2015).

[13] Chandar, Sarath, et al. “Correlational neural networks.” Neural computation 28.2 (2016): 257-285.

[14] Wang, Weiran, et al. “On deep multi-view representation learning.” International Conference on Machine Learning. 2015.

[15] Yan, Fei, and Krystian Mikolajczyk. “Deep correlation for matching images and text.” Computer Vision and Pattern Recognition (CVPR), 2015 IEEE Conference on. IEEE, 2015.

[16] Eisenschtat, Aviv, and Lior Wolf. “Linking Image and Text With 2-Way Nets.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.

[17] Vinod, Hrishikesh D. “Canonical ridge and econometrics of joint production.” Journal of econometrics 4.2 (1976): 147-166.

[18] Cortes, Corinna, and Vladimir Vapnik. “Support-vector networks.” Machine learning 20.3 (1995): 273-297.

[19] Li, Yingming, Ming Yang, and Zhongfei Zhang. “Multi-view representation learning: A survey from shallow methods to deep methods.” arXiv preprint arXiv:1610.01206 (2016).

[20] Lai, Pei Ling, and Colin Fyfe. “Kernel and nonlinear canonical correlation analysis.” International Journal of Neural Systems 10.05 (2000): 365-377.

[21] Andrew, Galen, et al. “Deep canonical correlation analysis.” International Conference on Machine Learning. 2013.