All Stories

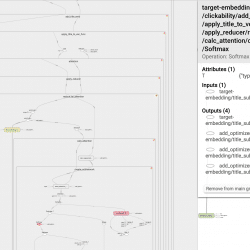

How to structure your TensorFlow graph like a software engineer So you’ve finished training your model, and it’s time to get some insights as to what it has learned. You decide which tensor should be interesting, and go look for it in your code – to find out what its name is. Then it hits you – you forgot to give it a name. You also forgot to wrap the logical code block with a named scope. It means you’ll have a hard time getting a reference to the tensor. It holds for python scripts as well as TensorBoard: Can you see that small red circle lost in the sea of tensors? Finding it is hard… That’s a bummer! It would have been much better if it looked more like this: That’s more like it! Each set of tensors which form a logical unit is wrapped inside […]

Some of the problems we tackle using machine learning involve categorical features that represent real world objects, such as words, items and categories. So what happens when at inference time we get new object values that have never been seen before? How can we prepare ourselves in advance so we can still make sense out of the input? Unseen values, also called OOV (Out of Vocabulary) values, must be handled properly. Different algorithms have different methods to deal with OOV values. Different assumptions on the categorical features should be treated differently as well. In this post, I’ll focus on the case of deep learning applied to dynamic data, where new values appear all the time. I’ll use Taboola’s recommender system as an example. Some of the inputs the model gets at inference time contain unseen values – this is common in recommender systems. Examples include: Item id: each recommendable item gets […]

About a year ago we incorporated a new type of feature into one of our models used for recommending content items to our users. I’m talking about the thumbnail of the content item: Up until that point we used the item’s title and metadata features. The title is easier to work with compared to the thumbnail – machine learning wise. Our model has matured and it was time to add the thumbnail to the party. This decision was the first step towards a horrible bias introduced into our train-test split procedure. Let me unfold the story… Setting the scene From our experience it’s hard to incorporate multiple types of features into a unified model. So we decided to take baby steps, and add the thumbnail to a model that uses only one feature – the title. There’s one thing you need to take into account when working with these […]

So you just finished designing that great neural network architecture of yours. It has a blazing number of 300 fully connected layers interleaved with 200 convolutional layers with 20 channels each, where the result is fed as the seed of a glorious bidirectional stacked LSTM with a pinch of attention. After training you get an accuracy of 99.99%, and you’re ready to ship it to production. But then you realize the production constraints won’t allow you to run inference using this beast. You need the inference to be done in under 200 milliseconds. In other words, you need to chop off half of the layers, give up on using convolutions, and let’s not get started about the costly LSTM… If only you could make that amazing model faster! Sometimes you can Here at Taboola we did it. Well, not exactly… Let me explain. One of our models has to predict […]

Now that more than a year has passed since our first deep learning project emerged, we have had to keep moving forward and delivering the best models we can. Doing so has involved a lot of research, trying out different models, from as simple as bag-of-words, LSTM and CNN, to the more advanced attention, MDN and multi-task learning. Even the simplest model we tried has many hyperparameters, and tuning these might be even more important than the actual architecture we ended up using – in terms of the model’s accuracy. Although there’s a lot of active research in the field of hyperparameter tuning (see 1, 2, 3), implementing this tuning process has evaded the spotlight. If you go around and ask people how they tune their models, their most likely answer will be “just write a script that does it for you”. Well, that’s easier said than done… Apparently, there […]