All Stories

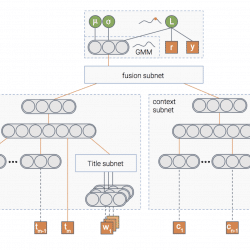

In the first post of the series we discussed three types of uncertainty that can affect your model – data uncertainty, model uncertainty and measurement uncertainty. In the second post we talked about various methods to handle the model uncertainty specifically . Then, in our third post we showed how we can use the model’s uncertainty to encourage exploration of new items in recommender systems. Wouldn’t it be great if we can handle all three types of uncertainty in a principled way using one unified model? In this post we’ll show you how we at Taboola implemented a neural network that estimates both the probability of an item being relevant to the user, as well as the uncertainty of this prediction. Let’s jump to the deep water A picture is worth a thousand words, isn’t it? And a picture containing a thousand neurons?… In any case, this is the […]

Now that we know what uncertainty types exist and learned some ways to model them, we can start talking about how to use them in our application. In this post we’ll introduce the exploration-exploitation problem and show you how uncertainty can help in solving it. We’ll focus on exploration in recommender systems, but the same idea can be applied in many applications of reinforcement learning – self driving cars, robots, etc. Problem Setting The goal of a recommender system is to recommend items that the users might find relevant. At Taboola, relevance is expressed via a click: we show a widget containing content recommendations, and the users choose if they want to click on one of the items. The probability of the user clicking on an item is called Click Through Rate (CTR). If we knew the CTR of all the items, the problem of which items to recommend […]

Understanding what a model doesn’t know is important both from the practitioner’s perspective and for the end users of many different machine learning applications. In our previous blog post we discussed the different types of uncertainty. We explained how we can use it to interpret and debug our models. In this post we’ll discuss different ways to obtain uncertainty in Deep Neural Networks. Let’s start by looking at neural networks from a Bayesian perspective. Bayesian learning 101 Bayesian statistics allow us to draw conclusions based on both evidence (data) and our prior knowledge about the world. This is often contrasted with frequentist statistics which only consider evidence. The prior knowledge captures our belief on which model generated the data, or what the weights of that model are. We can represent this belief using a prior distribution p(w) over the model’s weights. As we collect more data we update the […]

As deep neural networks (DNN) become more powerful, their complexity increases. This complexity introduces new challenges, including model interpretability. Interpretability is crucial in order to build models that are more robust and resistant to adversarial attacks. Moreover, designing a model for a new, not well researched domain is challenging and being able to interpret what the model is doing can help us in the process. The importance of model interpretation has driven researchers to develop a variety of methods over the past few years and an entire workshop was dedicated to this subject at the NIPS conference last year. These methods include: LIME: a method to explain a model’s prediction via local linear approximation Activation Maximization: a method for understanding which input patterns produce maximal model response Feature Visualizations Embedding a DNN’s layer into a low dimensional explanation space Employing methods from cognitive psychology Uncertainty estimation methods – the focus of […]