Delivering a good product to a live environment requires a big effort from R&D. The software development life cycle has six basic phases: understanding the requirements, design, coding, testing, deployment (including A/B tests, if necessary) and maintenance. But how can we measure product quality? By its stability? Its scalability? How easy it is to maintain? Bug-free code?

There are probably many definitions of a good product, but in my opinion, the two cornerstones are product behavior & functionality as defined (aligned with the product manager’s requirements), and zero critical bugs. A product can serve many goals, but if it doesn’t achieve its main one, it might have no reason to exist. Naturally, customers always expect high quality, so before releasing to production, QA should make sure that no critical bugs exist.

To uphold these two, both R&D and QA should be fully committed. From the QA perspective, things can get complicated when running end-to-end tests that involve UI/UX. Why is that?

Cross-browser testing – 9 different environments

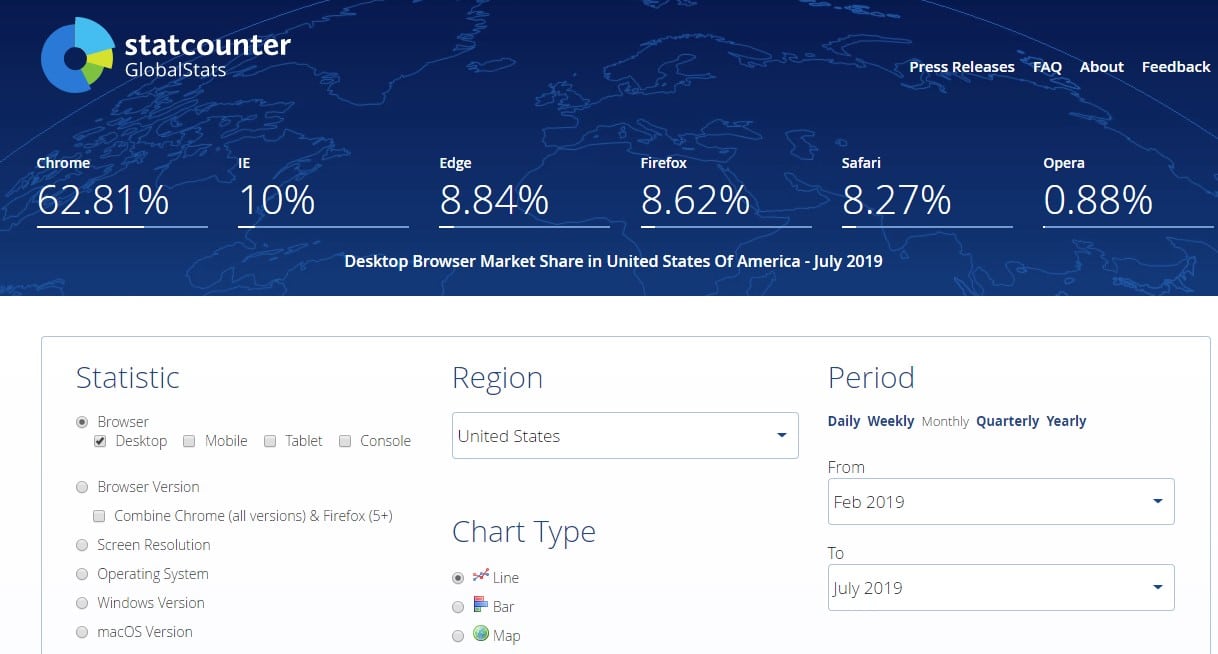

Everyone involved with the product should ask themselves who the product’s target audience is, because different geographical locations dictate different browser usage. For example, if I wanted to reach a U.S. desktop audience, I would probably start my test coverage with Chrome, Edge, Firefox & Internet Explorer. Below is a glimpse of data taken from Statcounter:

Of course, unless the product is meant to run on only one browser, it should also be supported on additional browsers. In our case, for the desktop environment, QA should cover Chrome (PC & Mac), Edge, Internet Explorer, Safari & Firefox. On mobile, we should cover Chrome on Android, and Safari & Chrome on iOS. Together, these are the nine environments: six desktop and three mobile.

Configure your tests

Every new product, or even a single feature, comes with different configurations. So, in addition to a sanity check, we should also run regression tests focused on the new functionality under the different configurations.

Even a simple feature can be composed of many parameters, each of which can take different values representing different states of the product.
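To illustrate how quickly parameters multiply into product states, here is a minimal Python sketch that enumerates every combination of parameter values. The parameter names and values (`layout`, `autoplay`, `theme`) and the helper `all_configurations` are hypothetical, chosen only for illustration.

```python
from itertools import product

# Hypothetical parameters of a single feature; names and values are
# illustrative only, not taken from a real product.
FEATURE_PARAMETERS = {
    "layout": ["grid", "list"],
    "autoplay": [True, False],
    "theme": ["light", "dark"],
}


def all_configurations(parameters):
    """Return every combination of parameter values, one dict per product state."""
    names = list(parameters)
    return [dict(zip(names, values)) for values in product(*parameters.values())]


configs = all_configurations(FEATURE_PARAMETERS)
print(len(configs))  # 2 * 2 * 2 = 8
print(configs[0])    # {'layout': 'grid', 'autoplay': True, 'theme': 'light'}
```

The number of states grows multiplicatively with every parameter added, which is one reason a test plan may settle on a handful of representative configurations rather than all of them.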

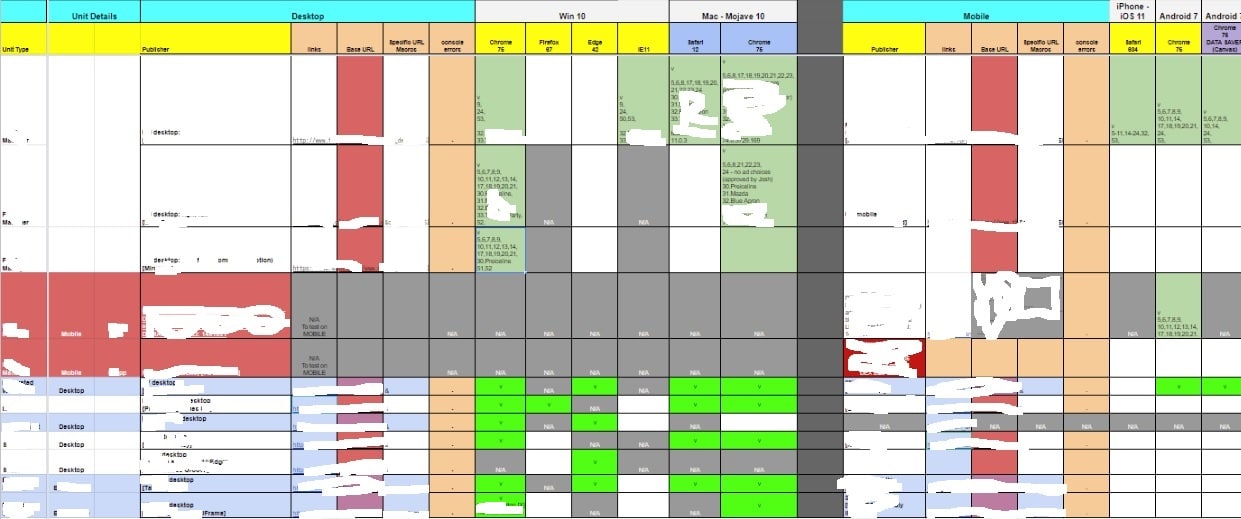

When examining the test coverage, we encounter a 3-dimensional matrix consisting of browsers × configurations × test cases. Below is an example of this matrix.

For instance, the matrix for a feature we tested a while ago had 9 browsers × 3 configurations × 53 test cases, which adds up to 1,431 tests.
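The matrix is simply the Cartesian product of its three dimensions. The Python sketch below builds it for the example above; the nine environments come from the cross-browser section, while the configuration names and test case IDs are hypothetical placeholders.

```python
from itertools import product

# The nine environments listed in the cross-browser section.
BROWSERS = [
    "Chrome (PC)", "Chrome (Mac)", "Edge", "Internet Explorer", "Safari", "Firefox",
    "Chrome (Android)", "Safari (iOS)", "Chrome (iOS)",
]
# Hypothetical names for the three configurations.
CONFIGURATIONS = ["config-A", "config-B", "config-C"]
# Hypothetical IDs standing in for the 53 real test cases (TC-01 .. TC-53).
TEST_CASES = [f"TC-{i:02d}" for i in range(1, 54)]

# Each cell of the 3-dimensional matrix is one (browser, configuration, test case) run.
matrix = list(product(BROWSERS, CONFIGURATIONS, TEST_CASES))
print(len(matrix))  # 9 * 3 * 53 = 1431
```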

Besides running the matrix, there are many action items QA should take care of before actually starting to run the tests. Learning from past failures & successes during our QA cycles, we’ve started to adopt a new approach for managing our tests.

In a perfect world, QA would run all scenarios under all configurations & environments before giving the green light to go to production. Obviously, this isn’t the case. As the last link in the chain before production, QA has to find the optimal balance between quality and time, which involves taking risks.

QA Kickoff

To save time and be as prepared as possible, QA can start building the test matrix, with the relevant test cases, from the specs before the feature is ready for testing.

A good tip here is to hold a test review with another QA engineer in order to get feedback and have an open discussion about the matrix – changing, adding or removing test cases. It is very similar to the code review done by dev. After that, it’s recommended to review it with the relevant PM & dev as well, in order to set expectations – what is tested and where – while managing the risk & giving time estimates.

Naturally, when dev finishes their part, the next step is to start running the test matrix. From my experience, there are some necessary steps that should be done beforehand. It’s recommended that QA do a handoff with dev in order to understand what was developed, which configurations / parameters should be used and what it should look like, and most importantly, to ask questions about the behavior, environments, known issues, etc.

Before moving the feature to QA, dev usually run some tests on their side to make sure that the feature is not broken. Often, dev create their own environment (mockups, tools, setup, test pages) for testing, and QA then uses it as well. This can be harmful in two ways: QA doesn’t understand how this setup was built, and you stay in your comfort zone, not thinking about other setups. Either way, you may miss potential bugs. It’s always preferable for QA to build its own environment and use it independently of dev’s environment.

Last but not least, it’s always useful to go over old test docs & old bugs related to the area you are testing, in order to catch issues that weren’t in the original scope.

Hands-on

Are we ready to start with the matrix already? It depends.

You can rush into covering the matrix, but the risk here is catching bugs at a late stage, which can delay the deployment to production.

Two things can help here. First, create one basic “happy flow” that tests the feature in a straightforward way (without any special cases) and run it across all environments (OSs). This is the most basic sanity check that the feature works and doesn’t break anything else. Second, I would exercise the feature on Chrome only (the most commonly used browser today) and do some freestyle testing, changing some flags and verifying that the behavior is as expected.
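The first step, one happy flow across every environment, can be thought of as a gate in front of the matrix. Below is a minimal Python sketch, assuming that any blocker bug found here means the matrix should not start yet. The `Bug` type, `sanity_gate` and `fake_happy_flow` are hypothetical; in practice the happy-flow run may be manual or driven by whatever automation tool you already use.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Bug:
    title: str
    blocker: bool = False  # True if the bug blocks further testing


def sanity_gate(
    environments: List[str],
    run_happy_flow: Callable[[str], List[Bug]],
) -> Tuple[bool, Dict[str, List[Bug]]]:
    """Run the happy flow once per environment.

    Returns (ready_for_matrix, bugs_per_environment). The matrix should only
    start when no environment reported a blocker bug.
    """
    bugs_per_env = {env: run_happy_flow(env) for env in environments}
    has_blocker = any(bug.blocker for bugs in bugs_per_env.values() for bug in bugs)
    return not has_blocker, bugs_per_env


# Hypothetical stand-in for a real (manual or automated) happy-flow run.
def fake_happy_flow(env: str) -> List[Bug]:
    if env == "Internet Explorer":
        return [Bug("Feature does not render", blocker=True)]
    return []


ready, bugs = sanity_gate(["Chrome (PC)", "Firefox", "Internet Explorer"], fake_happy_flow)
print(ready)  # False: a blocker was found on Internet Explorer
```

Non-blocker bugs are still collected per environment, so they can be reported without holding back the matrix.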

Performing these two steps takes a couple of hours. It gives QA a basic look & feel of the feature and some confidence in its stability, and it can also bring up new ideas for additional test cases.

If no blocker bug was found during this session, QA can proceed with the matrix. Depending on the deadlines, the team decides whether to check the whole matrix or to cover it partially. On very important projects, we tend to cover all of it, as long as we stay within reasonable timelines. Most projects are not considered super important; for those, our plan is to verify all test cases on Chrome. For the other browsers, it’s your decision which cells of the test matrix to cover and which tests to run, resulting in a combination of randomness and intentional decisions.
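One way to put this partial-coverage plan into practice is sketched below in Python, under a few assumptions of our own: “Chrome” is represented by a single `full_browser` entry, the intentional part is a hand-picked `must_run` list of test cases, and the random part is a seeded sample of a fixed `sample_ratio` of the remaining cells. The function name, parameters and test case IDs are hypothetical, not a prescribed method.

```python
import random
from itertools import product
from typing import List, Tuple

Cell = Tuple[str, str, str]  # (browser, configuration, test case)


def plan_partial_coverage(
    browsers: List[str],
    configurations: List[str],
    test_cases: List[str],
    full_browser: str = "Chrome (PC)",
    must_run: Tuple[str, ...] = (),
    sample_ratio: float = 0.2,
    seed: int = 0,
) -> List[Cell]:
    """Pick which cells of the test matrix to run.

    - Every test case runs on `full_browser` under every configuration.
    - On the other browsers, test cases in `must_run` always run (intentional),
      plus a random `sample_ratio` of the remaining cells (randomness).
    """
    rng = random.Random(seed)  # seeded so the same plan can be reproduced
    must = set(must_run)
    plan = [(full_browser, c, t) for c, t in product(configurations, test_cases)]
    others = [b for b in browsers if b != full_browser]
    optional = []
    for cell in product(others, configurations, test_cases):
        if cell[2] in must:
            plan.append(cell)
        else:
            optional.append(cell)
    plan.extend(rng.sample(optional, round(len(optional) * sample_ratio)))
    return plan


browsers = ["Chrome (PC)", "Chrome (Mac)", "Edge", "Internet Explorer", "Safari",
            "Firefox", "Chrome (Android)", "Safari (iOS)", "Chrome (iOS)"]
configs = ["config-A", "config-B", "config-C"]  # hypothetical names
tests = [f"TC-{i:02d}" for i in range(1, 54)]   # hypothetical IDs
plan = plan_partial_coverage(browsers, configs, tests, must_run=("TC-01",))
print(len(plan))  # 159 on Chrome + 24 must-run + 250 sampled = 433
```

Fixing the seed makes the random part of the plan reproducible, so a failure found on a sampled cell can be traced back to the same plan later.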

Learning from past mistakes encouraged us to build new procedures into our QA process and gave us more confidence in releasing stable, working products.