As a content discovery product, we need to pace each campaign throughout its lifetime in real time, spending the entire budget without overspending.

The team I lead is responsible for serving Taboola’s video content. Our main goal is to enable the growth of our business, and owning the entire serving process for video content is crucial to that end. Depending on third-party serving systems for this core process would leave us vulnerable to rising prices, compromised features, serving latency, reduced performance and so on.

To serve video on our own, we had to come up with a way to pace the number of times we display a video and to prevent cases in which a video's entire budget drains within a few seconds, a common scenario at Taboola’s scale when the video is not aggressively targeted. We also needed to support partners that want to display a video for a very short time (targeting sports events, for example), so we needed a real-time mechanism as well.

Thankfully, our solution was ready just as business needs forced us to stop working with our third-party serving system, which had been responsible for both serving and budget pacing.

Pacing video campaigns

We started by meeting with a few talented developers and throwing around ideas and metaphors, from banking mechanisms to a toilet flush tank (a "niagara").

The straightforward solution would be to calculate the number of impressions the total budget can buy and then distribute them across servers over the campaign's lifetime. However, at our scale we have about 100 servers (within the video group alone) and growing, all deployed through a CI/CD process, so the actual number of serving machines changes all the time. Keeping track of how many servers are requesting budget would be hard.

Moreover, this solution would serve in bursts, so we decided to manage pacing by throttling the serving of each campaign.

Understanding the terminology

The throttle is a value between 0 and 1 that represents the probability that a campaign is displayed on each video request.

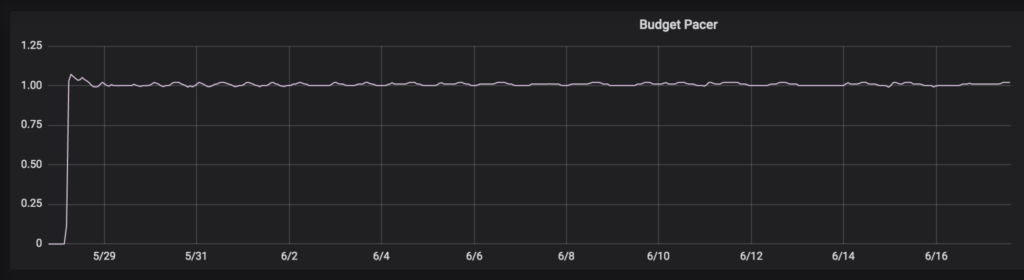

The pace is the percentage of the budget that has been spent divided by the percentage of the campaign's total lifetime that has passed. When the pace is equal to 1, the spending is evenly paced.

To reach pace = 1, on each iteration we multiply the throttle by the inverse of the pace. As a result, we get the desired behaviour: a pace below 1 increases the throttle, and a pace above 1 decreases it.

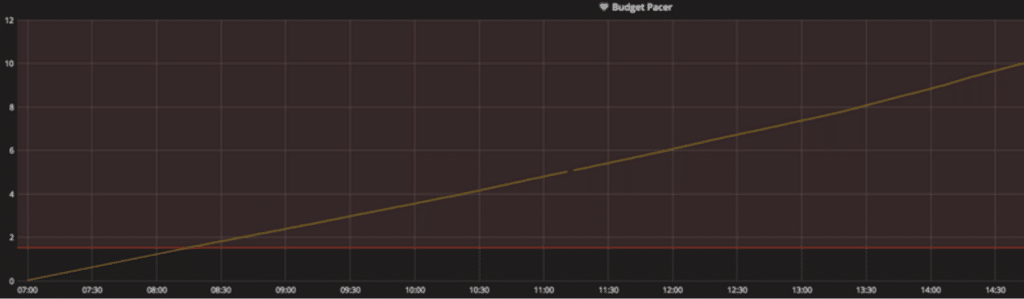

*Evenly paced campaign: linear spend graph over time

*Pace = %Budget / %Time

*Evenly paced spending should always be at pace = 1
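The definitions above can be sketched in a few lines of Python. This is only an illustration: the function names, the argument names, and the clamp to 1.0 are assumptions, not part of the original system.

```python
def pace(budget_spent: float, budget_total: float,
         time_elapsed: float, lifetime: float) -> float:
    """Pace = %Budget / %Time. A value of 1.0 means spending is on schedule."""
    if time_elapsed <= 0 or lifetime <= 0 or budget_total <= 0:
        raise ValueError("elapsed time, lifetime and total budget must be positive")
    budget_fraction = budget_spent / budget_total
    time_fraction = time_elapsed / lifetime
    return budget_fraction / time_fraction


def next_throttle(throttle: float, current_pace: float) -> float:
    """Multiply the throttle by the inverse pace.

    Assumption: the result is clamped to 1.0, because the throttle is a probability.
    The case of zero spend (pace == 0) is not handled here.
    """
    return min(1.0, throttle / current_pace)
```

For example, a campaign that has spent 200 out of 1,000 after 6 of its 24 hours has a pace of 0.2 / 0.25 = 0.8, so a throttle of 0.1 would be raised to 0.125.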

Data pipeline

Using this pacing technique requires real-time capabilities.

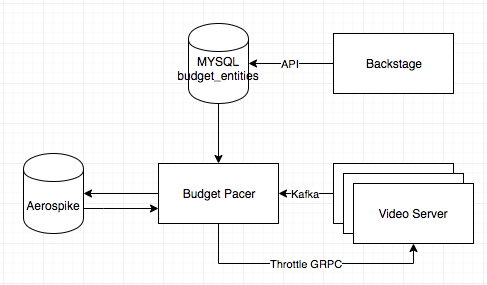

Our data pipeline uses Kafka, so getting real-time behaviour simply requires a Kafka consumer in the process.

To keep the mechanism stateless, we chose Aerospike as our NoSQL store because it excels at low-latency batch reads of small records, which is exactly what we needed. It also supports sharding out of the box, which helps with scaling.

The video servers request the throttle values for all paced campaigns every few seconds. A separate thread makes the request over gRPC and stores the result in a local cache.
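A minimal sketch of such a cache, assuming a hypothetical `fetch` callable that stands in for the gRPC call (the real client is not shown in this post). The class name, the 5-second default interval, and the choice to keep serving stale values when a refresh fails are all assumptions made for illustration.

```python
import threading
from typing import Callable, Dict


class ThrottleCache:
    """Local cache of campaign throttles, refreshed by a background thread."""

    def __init__(self, fetch: Callable[[], Dict[str, float]],
                 interval_s: float = 5.0) -> None:
        self._fetch = fetch            # hypothetical stand-in for the gRPC request
        self._interval_s = interval_s
        self._throttles: Dict[str, float] = {}
        self._lock = threading.Lock()
        self._stop = threading.Event()

    def refresh_once(self) -> None:
        """Fetch all throttles and swap them into the cache."""
        fresh = dict(self._fetch())
        with self._lock:
            self._throttles = fresh

    def get(self, campaign_id: str, default: float = 0.0) -> float:
        """Return the cached throttle (assumption: unknown campaigns get 0.0)."""
        with self._lock:
            return self._throttles.get(campaign_id, default)

    def start(self) -> threading.Thread:
        """Refresh in a daemon thread until stop() is called."""
        def loop() -> None:
            while not self._stop.is_set():
                try:
                    self.refresh_once()
                except Exception:
                    pass  # assumption: keep the last known values on failure
                self._stop.wait(self._interval_s)

        thread = threading.Thread(target=loop, daemon=True)
        thread.start()
        return thread

    def stop(self) -> None:
        self._stop.set()
```

The request path only ever calls `get`, so serving never waits on the network.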

Every video campaign request generates a random number. If the number is lower than the throttle in the cache, the campaign is served; if it is higher, the campaign is filtered out.
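The serving decision itself is tiny. The sketch below assumes the cache is a plain dictionary and that a campaign missing from the cache is not served; both are illustrative choices, as is the function name.

```python
import random
from typing import Dict, Optional


def should_serve(campaign_id: str, throttles: Dict[str, float],
                 rng: Optional[random.Random] = None) -> bool:
    """Serve the campaign when a uniform draw in [0, 1) falls below its throttle."""
    throttle = throttles.get(campaign_id, 0.0)  # assumption: unknown means filtered
    draw = (rng or random).random()
    return draw < throttle
```

Because the draw is uniform, a throttle of 0.3 lets through roughly 30% of requests across all servers, without any server needing to know how many other servers exist.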

Architecture

Overall, we wanted to build a robust, scalable and reusable program that is decoupled from the rest of the server. We expected it to run in real time and to be agnostic to the number of servers using it.

Sound plan, but will it work? To avoid wasting development time, we started by exploring and simulating the entire flow: a thread pool of server objects requested the throttle and reported back the amount of budget they used. The throttle was then recalculated to adjust the pace, the server objects used the new value, and so on, throughout the configured lifetime and with the exact desired budget.
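A simulation along these lines might look like the following sketch. It is not the original simulator: the function names, the fixed cost per impression, the traffic numbers and the seed are all made up, and it uses only the plain rule of multiplying the throttle by the inverse pace, with a small starting value that grows until spend appears.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def serve_tick(rng: random.Random, throttle: float, requests: int) -> int:
    """Count how many of one server's requests pass the throttle in a tick."""
    return sum(1 for _ in range(requests) if rng.random() < throttle)


def simulate(budget: float = 100.0, ticks: int = 200, servers: int = 4,
             requests_per_tick: int = 100, cost: float = 0.01,
             seed: int = 7) -> List[Tuple[int, float, float]]:
    """Return (tick, throttle, spent) after every throttle recalculation."""
    # One seeded generator per simulated server keeps the run reproducible.
    rngs = [random.Random(seed + i) for i in range(servers)]
    throttle, spent = 1e-6, 0.0
    history: List[Tuple[int, float, float]] = []
    with ThreadPoolExecutor(max_workers=servers) as pool:
        for tick in range(1, ticks + 1):
            # Every server serves with the current throttle and reports back.
            shown = sum(pool.map(serve_tick, rngs,
                                 [throttle] * servers,
                                 [requests_per_tick] * servers))
            spent += shown * cost
            if spent == 0.0:
                throttle = min(1.0, throttle * 1.2)   # no spend yet: ramp up
            else:
                pace = (spent / budget) / (tick / ticks)
                throttle = min(1.0, throttle / pace)  # T = T * 1/P
            history.append((tick, throttle, spent))
    return history
```

With these made-up defaults, an even spend needs 50 impressions per tick out of 400 requests, which corresponds to a throttle of 0.125. Plotting `spent` against `tick` from the returned history shows how far a run drifts from the linear ideal.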

Time for the real world

We wired Backstage, Taboola’s campaign management dashboard, to create video campaigns through an API. This way each campaign has budget entity values, each of which represents an entity we need to pace. An RxJava-based consumer, extended from infrastructure we already had in the video server, listens to a topic containing revenue data and aggregates it per campaign for the relevant dates. We then write the aggregation into Aerospike.
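The aggregation step can be pictured with the sketch below. It is written in Python rather than RxJava, and the event fields, the `CampaignWindow` type and the rule that "relevant dates" means the campaign's start and end are assumptions for illustration; the real topic schema is not described here.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable


@dataclass(frozen=True)
class RevenueEvent:          # hypothetical shape of a revenue message
    campaign_id: str
    timestamp: datetime
    revenue: float


@dataclass(frozen=True)
class CampaignWindow:        # hypothetical: the dates a campaign is paced over
    start: datetime
    end: datetime


def aggregate_spend(events: Iterable[RevenueEvent],
                    windows: Dict[str, CampaignWindow]) -> Dict[str, float]:
    """Sum revenue per campaign, keeping only events inside the campaign's dates."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        window = windows.get(event.campaign_id)
        if window is None:
            continue  # not a paced campaign
        if window.start <= event.timestamp < window.end:
            totals[event.campaign_id] += event.revenue
    return dict(totals)
```

The resulting per-campaign totals are what would be written to the store and later read as the "spent" side of the pace calculation.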

Throttle calculation

Each campaign starts with a small throttle value of 10^-6, and on every calculation iteration (every 10 seconds) the value is increased by 20% until spend appears in the Aerospike record. From then on we apply the formula T = T * 1/P. During this process we constantly check the delta between consecutive pace values. When the delta is smaller than a given threshold, we save this value. When the pace crosses 1, we apply the saved value to keep the campaign on the right pace.
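The ramp-up and the formula can be sketched as follows. The constant and function names are illustrative, the clamp to 1.0 is an assumption, and the delta check on the pace is left out because its exact rule is not spelled out here.

```python
INITIAL_THROTTLE = 1e-6   # starting value from the text
RAMP_FACTOR = 1.2         # +20% per iteration until spend appears


def update_throttle(throttle: float, spent: float, pace: float) -> float:
    """One calculation iteration: ramp up before any spend, then T = T * 1/P."""
    if spent <= 0.0:
        return min(1.0, throttle * RAMP_FACTOR)
    # Assumption: clamp to 1.0 because the throttle is a probability.
    return min(1.0, throttle / pace)


def ramp_iterations(start: float = INITIAL_THROTTLE) -> int:
    """Count iterations for the ramp alone to reach a throttle of 1.0."""
    throttle, iterations = start, 0
    while throttle < 1.0:
        throttle = min(1.0, throttle * RAMP_FACTOR)
        iterations += 1
    return iterations
```

`ramp_iterations()` returns 76, so even if no spend were ever observed, the throttle would reach 1.0 after about 760 seconds at one iteration per 10 seconds. In practice spend appears much earlier, and the pace formula takes over from there.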

All the calculated values are stored in Aerospike and in a local map, which the video servers query using gRPC.

We reduce the consuming load by running two Budget Pacers. They also balance the load of the video servers requesting throttles, but only one Budget Pacer is allowed to calculate throttles and write them into Aerospike. ZooKeeper decides which Budget Pacer is the master; the slave is only allowed to read the throttles and expose them through gRPC.
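The split between the two roles can be illustrated with the sketch below. The election itself is not shown: `is_master` is a plain flag that, in the real system, would be driven by ZooKeeper, and a shared dictionary stands in for Aerospike. The class and method names are hypothetical.

```python
from typing import Dict, Optional


class BudgetPacerNode:
    """One Budget Pacer instance; only the master may write throttles."""

    def __init__(self, name: str, store: Dict[str, float]) -> None:
        self.name = name
        self.is_master = False   # assumption: set by the leader election
        self._store = store      # stand-in for the shared Aerospike records

    def publish_throttle(self, campaign_id: str, throttle: float) -> bool:
        """Write a throttle if this node is the master; otherwise do nothing."""
        if not self.is_master:
            return False
        self._store[campaign_id] = throttle
        return True

    def read_throttle(self, campaign_id: str) -> Optional[float]:
        """Both roles can read and expose throttles to the video servers."""
        return self._store.get(campaign_id)
```

Keeping a single writer avoids two instances racing to update the same campaign, while both instances can still answer read requests.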

All our data is stored in two data centers for full data recovery. To support this in Budget Pacer, we run an instance in each data center, each with its own Kafka consumer and Aerospike.

Console directs the server calls to the master DC and balances the load between the two Budget Pacers within the DC.

Happy budget pacing

During the last quarter, the system more than doubled the number of campaigns and the budget it serves.

A campaign can reach full serving capacity within minutes of its creation.

The infrastructure proved itself so well that we decided to use it for two new features: throttling RTB calls based on their performance, and calculating the pricing of one of our business models.