So, the firefighters are in your data center, there is no electricity, and the pager is more like a DDoS attack on your phone than anything informative. You look at your watch, multiple thoughts running through your head. Why me? Why now? What was the last DR test result? How do you lead the team through this IT catastrophe and live to write about it? This is my story, my personal fight with the IT “Murphy’s laws”, and what we can all learn from it.

It was a Friday, the kind of day you know you need to be extra careful with. It’s always at the end of the work week or smack in the middle of the night. (No IT catastrophe ever happens when it’s convenient for you, now does it? They always cluster around the most difficult times.) Anyway, it’s the end of the day on Friday and multiple systems suddenly go dark. You hear that specific ringtone you configured for PagerDuty, and it just will not stop playing. You get “The feeling” (with a capital T). You know you are connecting into a bad situation. Oh, and the company is already operating under its BCP (business continuity plan), as it’s Covid-19 time, and things are hard all over.

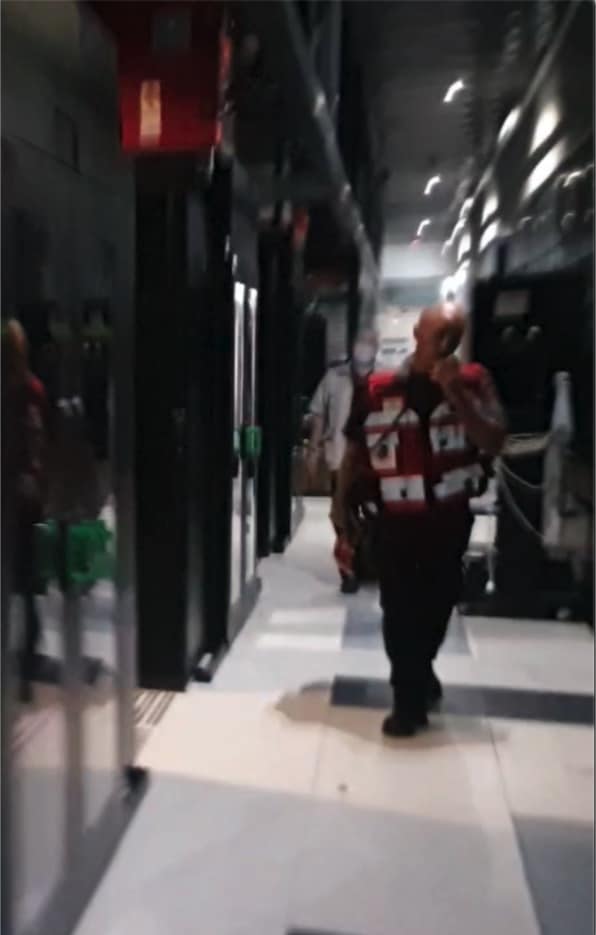

You connect to the VPN (sigh of relief: the dual VPN solution across two different data centers is working), and the basic dashboards show that the front-end services are operational, yet the war room procedure has been triggered. That means the monitoring set up to detect a large-scale downtime event has fired. While you’re joining the Zoom call, this message lands in the #Slack channel for production incidents: “There is no power in the backend data center”. Now everything starts falling into place: the multiple alerts, the services that are down and all the bad things that are yet to come. Wait, one more thing grabs your attention. The data center logistics group is sending you a direct message on your phone. You already know there is no power, so this message takes priority over the others, and you open that thread: there is some text and a picture. Skipping the text, you open the picture and see firefighters in the data center. Many things go through your mind, but one is near the top: at least they don’t have hoses, and you don’t see water anywhere in the picture.

Crisis Manager

To start with, I’m the crisis manager, so gathering information and prioritizing actions is my job. I would love an IT/SRE equivalent of the pilots’ “Aviate, Navigate, Communicate”, but I haven’t found the magic acronym that works for me. So what’s important when managing a large-scale IT incident that can develop into a full-blown business outage?

- What services are impacted?

- What is the reason for the service interruption?

- What is the business impact of each service interruption? (for prioritizing the next steps)

- Do we have a plan to fix each interruption we identify?

- How do we best communicate to the organization (and clients if need be) what we know and what to expect?

First things first

Our top priority is safety and human life. That might sound grandiose for a software company that doesn’t manage life-support systems, but we do run data centers, and these are high-energy locations with fire hazards and fire suppression systems that are not human-friendly. So, once it’s clear that everyone is safe and the data center staff is not at risk, our next priority is to bring the business-impacting services back online. Whether via the DR plan or by brute-forcing through the challenges, there is clearly a lot of work ahead of us. It ranges from making sure we have task forces working on the right problems (that’s two issues to manage right there: assembling the task forces and prioritizing the tasks) to keeping situational awareness as complete as possible. When managing a large-scale crisis with many engineers from different reporting structures pitching in, it’s easy to get lost.

Before diving into the game plan and this specific incident, it’s important to understand the role of the crisis manager. What does this person do, and why do we need a hands-off-the-keyboard person in the mix? Engineers are more than capable of solving problems, including complex ones, especially if they were part of the team that wrote the code. Over the past years, with the DevOps movement, it has become more and more accepted to have coders on call, not only the SRE team. Something along the lines of “you built it, it’s your responsibility to keep it operational”. The engineers (and specifically the coders among them) are now also part of the team ensuring the services are up and running. Having people from different backgrounds, different teams and different approaches to problem solving is a great thing! It’s also a challenge. Solving problems in production is very different from working on a project. Time pressure, SLA requirements, communication with the business and multiple groups working to resolve the incident all become factors in getting things done. This is why you want a crisis manager. Once you cross some scale threshold (each organization needs to set its own), you want that position well defined, to help steer the work effort and bring the problem to a speedy resolution.

IT problems are nothing new. The term “bug” is popularly associated with Rear Admiral Grace Hopper and the moth found in the Harvard Mark II computer in 1947, and software problems already surfaced in the Apollo missions and elsewhere over 50 years ago. With the rise of e-commerce and internet services, service management moved from the work of a few to a need that now touches almost every business. Frameworks and methodologies for solving IT incidents are well documented in ITSM, ITIL and other standards. One that I found easy for the uninitiated to use is Atlassian’s (thanks, Atlassian, for publishing it). In our IT organization, the role of incident manager is not fixed; it is assigned in the moment based on who is available on call. That said, we do have a short list of on-call people who can act in this capacity.

Solving the problem

Back to our incident: the firefighters are on site and it’s already clear we don’t have power. The list of impacted services is mostly well defined (I say mostly because services that failed over to DR might not keep up over time). We already have a Zoom war room open and active, and my first move as incident manager is to post messages in the relevant #Slack channel. Two factors drive this choice: the need to communicate as widely as possible across the organization, and the need to pull in additional people even if they are not on call (all hands on deck). Next, we need to define priorities according to business needs. This is very specific to each business, so I will only say, in broad strokes, that the needs come from SLAs, cost, and online versus offline processing. So while the billing and backup systems are super important, and might take longer to restore the longer we wait, they don’t take priority over client-facing systems that directly impact the business SLAs or client operations.
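To make that prioritization rule concrete, here is a minimal Python sketch of ordering impacted services for restoration. The `Service` fields, the weighting (client-facing first, then online before offline processing, then a hypothetical hourly cost estimate) and the example service names are illustrative assumptions, not our actual model; every business will set its own criteria.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    client_facing: bool   # directly affects clients or business SLAs
    online: bool          # serves live traffic (vs. offline/batch processing)
    hourly_cost: float    # hypothetical estimate of downtime cost per hour


def restore_order(services):
    """Return services in suggested restore order (hypothetical weighting).

    Sort key: client-facing before internal, online before offline,
    then highest estimated hourly cost first. False sorts before True,
    hence the `not` on the boolean fields.
    """
    return sorted(
        services,
        key=lambda s: (not s.client_facing, not s.online, -s.hourly_cost),
    )


if __name__ == "__main__":
    # Hypothetical impacted services, loosely modeled on the incident above.
    impacted = [
        Service("billing", client_facing=False, online=False, hourly_cost=500.0),
        Service("backups", client_facing=False, online=False, hourly_cost=200.0),
        Service("client-api", client_facing=True, online=True, hourly_cost=5000.0),
        Service("reporting", client_facing=True, online=False, hourly_cost=1000.0),
    ]
    for rank, svc in enumerate(restore_order(impacted), start=1):
        print(rank, svc.name)
```

Sorting on a tuple keeps the rules explicit and easy to argue about in the war room. A real model might also weigh how restore effort grows the longer a system stays down, as with billing and backups.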

What’s the next step? Assigning the task force teams to the prioritized tasks and checking whether there are shared resources between them, that is, whether any task force needs a resource that another one is already using (this can definitely be people: network skills are in demand, and the data center logistics team gets called on for physical actions). While working on the task force resource allocations, I make sure to document it all in the #Slack channels, so anyone in the organization can see who is working on what. The main idea here is that each task force needs some interaction with the business to keep situational awareness accurate. While the teams get into action (preferably on separate Zoom calls), one person from each task force acts as the liaison to the main war room Zoom. This matters because it keeps feedback flowing and, again, supports that all-important situational awareness.
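The shared-resource check is simple enough to sketch in a few lines of Python. This is a hypothetical illustration: the task force names and resource labels (people or skills, such as a network engineer or the data center logistics team) are made up, and in our incident this bookkeeping happened in the #Slack channels, not in code.

```python
from collections import defaultdict


def shared_resources(task_forces):
    """Map each contested resource to the task forces that need it.

    `task_forces` maps a task force name to the set of resources
    (people, skills, equipment) it needs. Only resources claimed by
    more than one task force are returned.
    """
    claims = defaultdict(list)
    for team, resources in task_forces.items():
        for resource in resources:
            claims[resource].append(team)
    return {r: teams for r, teams in claims.items() if len(teams) > 1}


if __name__ == "__main__":
    # Hypothetical task forces and the resources each one needs.
    task_forces = {
        "restore-client-api": {"network-engineer", "db-admin"},
        "failover-storage": {"network-engineer", "dc-logistics"},
        "restore-billing": {"db-admin"},
    }
    for resource, teams in sorted(shared_resources(task_forces).items()):
        print(f"{resource}: needed by {', '.join(sorted(teams))}")
```

Each contested resource is a decision for the crisis manager, typically resolved in favor of the task force working on the higher-priority service.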

As we finish our DR run and bring services back up, power is restored in the data center, opening new avenues of action. Do we keep the DR site as the main site for the weekend, or fail back to the main site? The optimal choice would be to set up the main site as the new DR site, make sure its systems are back online and operational, and, on top of that, make sure the lower-priority services are back online.

Working through a crisis

There are a lot of details and a lot of work, and some of it is just grit: the long hours on Zoom, asking people what can and should be done, making sure nothing is overlooked, and making sure it’s all documented, typing feverishly while people talk so others know what’s going on. It really does come down to grit, the ability to keep going when the path to service availability isn’t clear. Yes, you should always have a plan, a DR site, a culture of chaos engineering, and the ability to fail partially rather than have all services come crashing down when something isn’t working. However, this isn’t always the case. And then there is Murphy: any good plan or well-documented system will always have some new and unexplored way of failing. That is when you need a clear language for managing IT incidents, with a vocabulary in place to talk about business impact, time to resolve, task forces, communication liaisons and meticulous documentation. If it doesn’t help you solve the issue faster, it will absolutely help you learn how to do so next time. There is always a next time.

That next time is something you need to prepare for. When we have suffered an IT crisis, especially a “man-made” one (as most of them are), it’s important to learn from the mistake. The quote attributed to Winston Churchill, “Never let a good crisis go to waste”, embodies the idea behind running a post-mortem: if you have already persevered, suffered the hardship and fixed something, use that experience to improve. Otherwise, you are compounding the loss from the crisis. Not only do you suffer the loss of revenue or customer trust, but you also miss a learning opportunity and increase the chances of the same problem happening again.

Our data center didn’t burn down. Faulty wiring in our fire suppression system triggered the fire alarm, which in turn cut power and blasted the “offending” electrical circuit with a suppression agent. The alarm also summoned the firefighters, and their process defined the duration of the data center blackout (firefighters on site, making sure air quality was back to normal, and confirming that the backup fire suppression system was up to the task before returning to normal operations). I can only guess what my reaction was when I saw the picture of the firefighters. My focus on incident management was so intense that I just remember the world slowing down as I got all the task force teams lined up, and the crazy rush of adrenaline coursing through us all as we fought to keep every business-critical system up and running.