The Dark Ages

We initially adopted oVirt as our virtualization platform, and it proved to be a good product with several notable advantages. Its open-source nature allowed us to leverage a wide range of features and customization options.

However, despite its strengths, we ran into several problems that pushed us to look for a better virtualization solution. The two major drawbacks were the lack of a working DFS and problems with inventory management. We also faced occasional performance and stability issues: some resource-intensive workloads experienced latency or unexpected behavior, which hurt the overall performance of our virtualized environment. These problems became more pronounced as our infrastructure grew, leading to a decrease in productivity and user satisfaction.

We needed to give our R&D teams a better solution, and ourselves a product that is easier to manage and maintain.

KubeVirt to the rescue

KubeVirt is an open-source virtualization solution that enables running virtual machines (VMs) on top of Kubernetes clusters. It allows users to leverage the benefits of both containers and VMs, making it easier to manage both traditional and cloud-native workloads in a single platform.

KubeVirt uses a custom resource definition (CRD) to define VMs as Kubernetes objects, making them first-class citizens in the Kubernetes ecosystem.

This means that VMs can be managed using Kubernetes-native tools and APIs, making them easier to automate and orchestrate.
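To make this concrete, here is a minimal sketch of a VM expressed as a Kubernetes object. The VM name, the memory request, and the demo disk image are illustrative assumptions, not values from our environment.

```python
import json

# Minimal KubeVirt VirtualMachine manifest built as a plain Python dict.
# The name "demo-vm", the 1Gi memory request and the demo container disk
# image are illustrative assumptions, not values from our clusters.
vm_manifest = {
    "apiVersion": "kubevirt.io/v1",
    "kind": "VirtualMachine",
    "metadata": {"name": "demo-vm", "namespace": "default"},
    "spec": {
        "runStrategy": "Always",  # keep the VM running
        "template": {
            "spec": {
                "domain": {
                    "devices": {
                        "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]
                    },
                    "resources": {"requests": {"memory": "1Gi"}},
                },
                "volumes": [
                    {
                        "name": "rootdisk",
                        "containerDisk": {
                            "image": "quay.io/kubevirt/cirros-container-disk-demo"
                        },
                    }
                ],
            }
        },
    },
}

# JSON is valid YAML, so the output can be piped to `kubectl apply -f -`.
print(json.dumps(vm_manifest, indent=2))
```

Because the VM is an ordinary Kubernetes object, the same manifest can be listed, patched, or deleted with the usual tooling, which is what makes it easy to automate.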

KubeVirt also provides a virtualization API that allows for the creation of virtual devices and drivers, giving users the ability to configure and customize their VMs to suit their specific needs.

KubeVirt also integrates with popular virtualization technologies, such as QEMU and libvirt, making it possible to use existing VM images and templates with Kubernetes (this made our migration from oVirt much easier).

Overall, KubeVirt provides a powerful and flexible solution for managing virtual workloads in Kubernetes, allowing for a more streamlined and efficient approach to managing hybrid workloads.

KubeVirt vs ????

KubeVirt provides several benefits over other products in the virtualization space:

1. Seamless integration with Kubernetes: KubeVirt is designed to seamlessly integrate with Kubernetes, allowing virtual machines to be managed as Kubernetes objects. This means that users can leverage their existing Kubernetes skills and tools to manage virtual machines, making it easier to manage hybrid workloads.

2. Customizable virtualization: KubeVirt provides a virtualization API that allows for the creation of custom virtual devices and drivers. This means that users can customize their virtual machines to meet their specific needs, making it easier to run legacy workloads (ahem, oVirt) on modern infrastructure.

3. Open-source and community-driven: KubeVirt is an open-source project with an active community of contributors. This means that we can benefit from a wide range of features and integrations contributed by the community, as well as access to support and resources.

4. Flexibility: KubeVirt provides flexibility in terms of the virtualization technology used, as it can integrate with popular technologies such as QEMU and libvirt. This means that users can reuse existing virtual machine images and templates with Kubernetes, making it easier to migrate VMs to Kubernetes.

In summary, KubeVirt provides a powerful and flexible solution for managing virtual workloads in Kubernetes. Seamless integration, customizable virtualization, open-source community support, flexibility in virtualization technology, and efficient resource utilization make it a very strong competitor in the virtualization space.

It’s all in the Fog

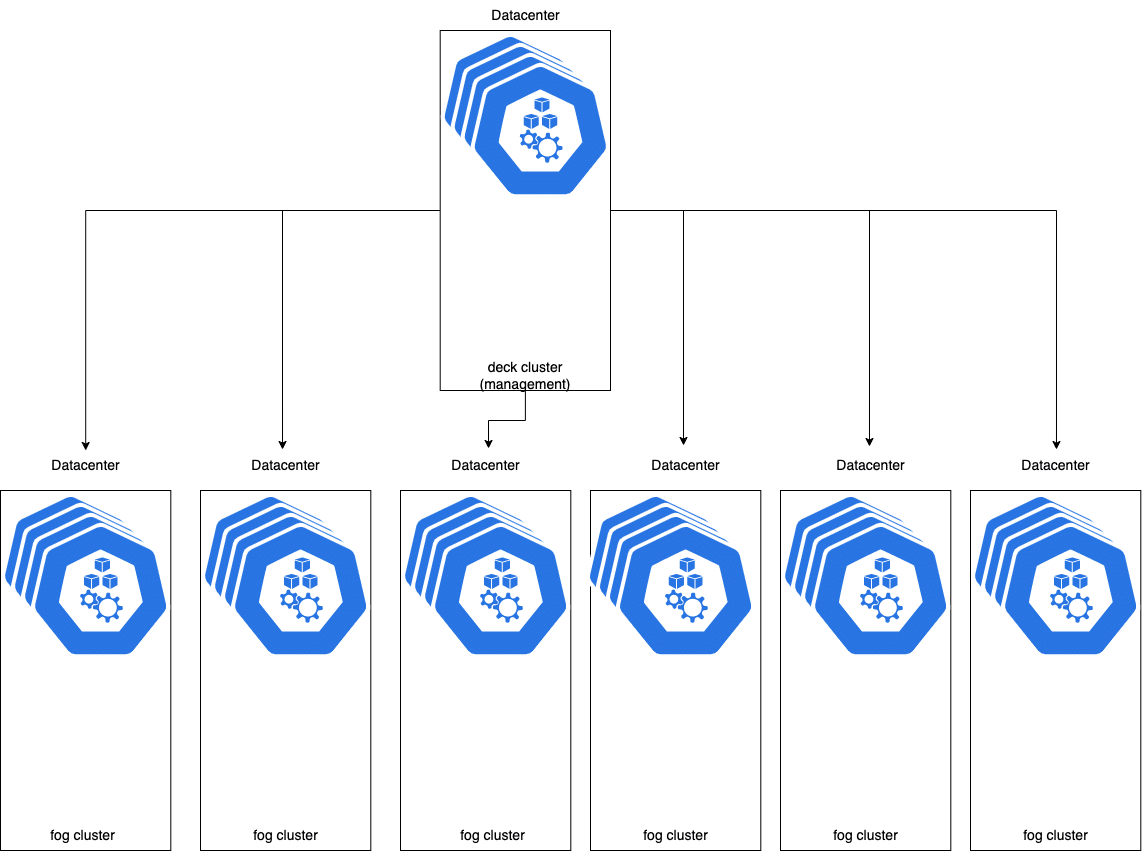

After deciding on the solution, what about the design? Let’s start from our needs:

- Multi-regional DCs

- Management cluster

- Easy maintenance

We have multi-regional data centers, so the solution has to work seamlessly across multiple geographic locations. In practice, this means deploying a KubeVirt cluster in each region.

The management cluster is used to manage the KubeVirt clusters located in the different regions, providing a centralized control plane for the entire virtualization infrastructure.

The KubeVirt solution should be designed with maintenance in mind. This could involve using tools like Kubernetes operators to automate common maintenance tasks, or designing the infrastructure in a way that allows for easy upgrades and updates without disrupting service availability.

Our General Stack

We won’t go into detail here, as there are very good articles about each part of our stack:

- Calico – A networking solution for Kubernetes that provides network policy enforcement, secure network communication, and network isolation. It uses BGP routing and can be integrated with other network plugins.

- Rook-Ceph – A storage solution for Kubernetes that uses the Ceph distributed storage system. It provides persistent storage for Kubernetes workloads and can be used to store block, file, and object data.

- Velero – A backup and restore solution for Kubernetes that can be used to protect and migrate Kubernetes workloads and resources. It can be used to create and restore backups of entire clusters or individual resources.

- VictoriaMetrics – A time-series database and monitoring solution for Kubernetes that can be used to collect, store, and analyze metrics and logs from Kubernetes workloads and resources. It supports a variety of data sources and can be used to create custom dashboards and alerts.

- CDK8s (Cloud Development Kit for Kubernetes) – An open-source software development framework that enables developers to define Kubernetes resources using familiar programming languages like TypeScript, Python, and Java.

- Argo Events – An open-source event-based system that allows you to trigger actions in response to specific events.

Fries and DNS Delegations

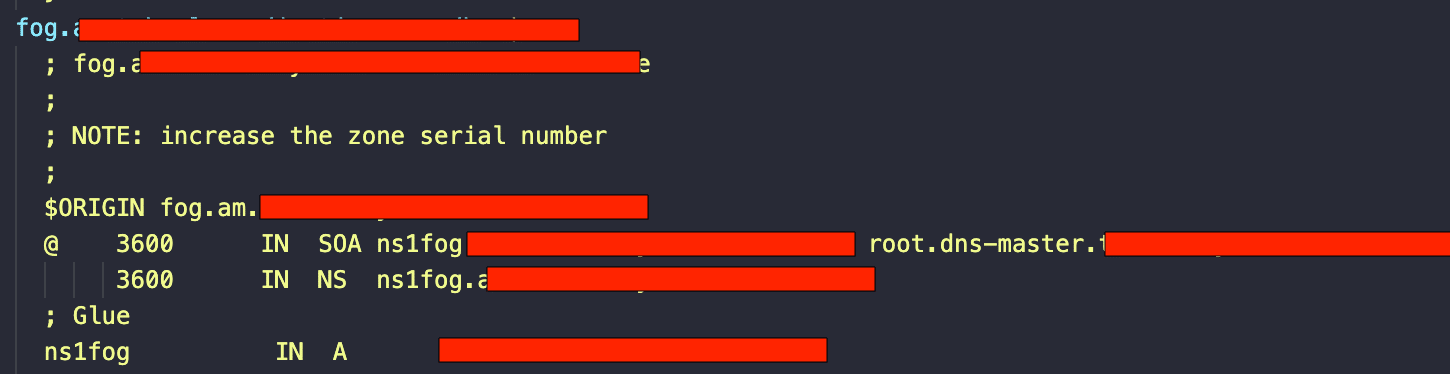

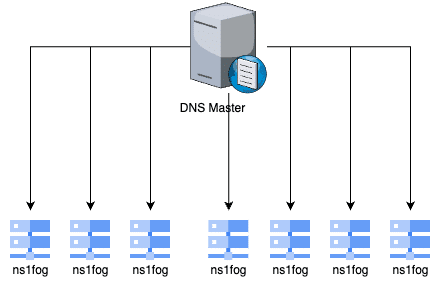

In our multi-region environment, the DNS architecture consists of a main DNS server and fog nameservers for each zone. The main DNS server handles different types of DNS queries like PTR, CNAME, and A records, while the fog nameservers are responsible for handling DNS queries for a particular zone.

Older Kubernetes releases shipped kube-dns as the cluster DNS server; CoreDNS has since replaced it as the default, and it is what we use.

CoreDNS is a flexible and extensible DNS server that is designed to work well with Kubernetes. It supports various plugins and middleware that can be used to customize its behavior to suit your needs.

When a DNS query is made related to a Kubernetes cluster, the main DNS server delegates the query to the relevant fog nameserver that is responsible for handling DNS queries for that particular Kubernetes cluster. The fog nameserver then uses CoreDNS to resolve the service name to an IP address.
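The delegation step can be sketched as a longest-suffix match over the delegated zones. This is only an illustration of the routing decision, not our actual configuration: every zone name and address below is made up.

```python
# Hypothetical delegation table: zone suffix -> fog nameserver address.
# All zone names and addresses below are made up for illustration.
DELEGATIONS = {
    "eu.k8s.example.com.": "10.10.0.53",
    "us.k8s.example.com.": "10.20.0.53",
}
MAIN_DNS = "10.0.0.53"


def pick_nameserver(qname: str) -> str:
    """Return the server that should answer qname (longest matching zone wins)."""
    name = qname.lower().rstrip(".") + "."  # normalise to a fully qualified name
    matches = [z for z in DELEGATIONS if name == z or name.endswith("." + z)]
    if not matches:
        return MAIN_DNS  # not delegated: the main DNS server answers itself
    return DELEGATIONS[max(matches, key=len)]


print(pick_nameserver("grafana.monitoring.eu.k8s.example.com"))
print(pick_nameserver("intranet.example.com"))
```

The first query falls inside a delegated zone and goes to that region's fog nameserver; the second is not delegated and stays with the main DNS server.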

(Snippet from coredns config)

Managing DNS in a multi-region environment with Kubernetes and CoreDNS can be challenging, but it provides several advantages over traditional DNS architectures. By using CoreDNS as the DNS server in Kubernetes and delegating DNS queries to the appropriate fog nameserver, you can simplify your DNS architecture, improve reliability and availability, and customize DNS behavior to suit your specific needs.

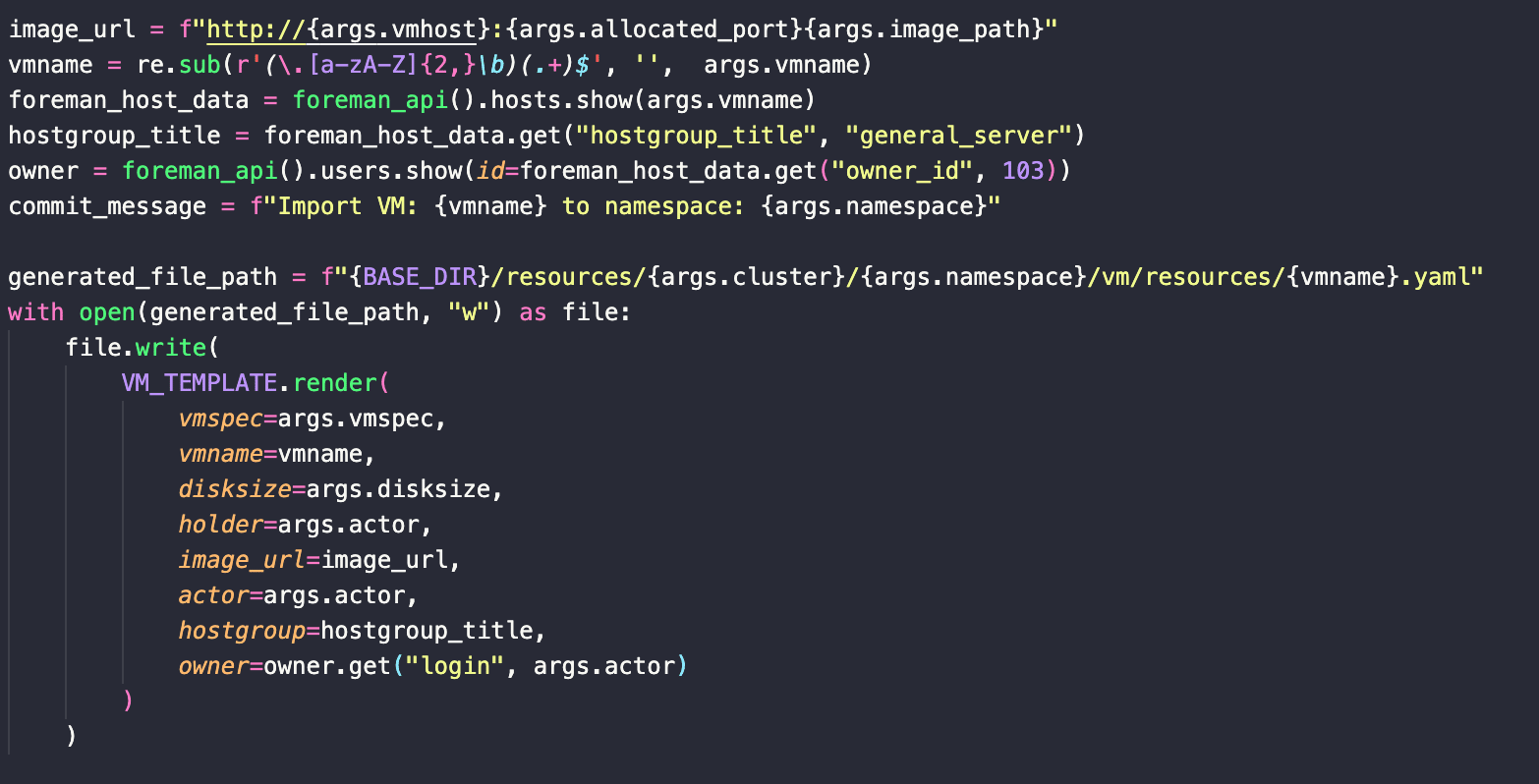

Fun, Games and Migrations

Migrating from oVirt to KubeVirt means moving virtual machines from one platform to another. The process requires careful planning: we had to evaluate the compatibility of the VMs with KubeVirt, make sure the necessary tools and configurations were in place, and keep in mind that oVirt was still a production system. To streamline the process, we created a custom script that automates the migration of VMs from oVirt to KubeVirt. The script takes into account our specific needs, such as integration with Foreman and our templates, and ensures a smooth and efficient migration process.

(Snippet from migration script)
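The real script is not reproduced here. As a rough illustration of one step such a script has to perform, the sketch below maps a VM exported from oVirt onto a KubeVirt manifest. The `OvirtVm` fields and the `to_kubevirt` helper are hypothetical, and the sketch assumes the disks have already been copied into PersistentVolumeClaims; the Foreman and template handling is left out.

```python
from dataclasses import dataclass, field


@dataclass
class OvirtVm:
    """Hypothetical subset of what is exported for one oVirt VM."""
    name: str
    cpu_cores: int
    memory_mib: int
    disk_claims: list = field(default_factory=list)  # PVCs holding the copied disks


def to_kubevirt(vm: OvirtVm) -> dict:
    """Map an exported oVirt VM onto a KubeVirt VirtualMachine manifest."""
    disks, volumes = [], []
    for index, claim in enumerate(vm.disk_claims):
        disk_name = f"disk{index}"
        disks.append({"name": disk_name, "disk": {"bus": "virtio"}})
        volumes.append({"name": disk_name, "persistentVolumeClaim": {"claimName": claim}})
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        # Kubernetes object names must be lowercase and may not contain "_".
        "metadata": {"name": vm.name.lower().replace("_", "-")},
        "spec": {
            "runStrategy": "Halted",  # created stopped; start it after a manual check
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {"cores": vm.cpu_cores},
                        "devices": {"disks": disks},
                        "resources": {"requests": {"memory": f"{vm.memory_mib}Mi"}},
                    },
                    "volumes": volumes,
                }
            },
        },
    }


legacy = OvirtVm("Build_Agent01", cpu_cores=4, memory_mib=8192, disk_claims=["build-agent01-root"])
manifest = to_kubevirt(legacy)
print(manifest["metadata"]["name"], manifest["spec"]["template"]["spec"]["domain"])
```

Creating the VM in the halted state is a design choice for this sketch: since oVirt is still in production, the original can keep running until the migrated copy has been verified.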

Continuous Deployment? Challenge accepted

It’s all fun and games, but how do we manage this monster? ArgoCD to the rescue!

With the growing adoption of Kubernetes, many CD systems have emerged to support containerized workloads. Among these systems is ArgoCD, an open-source project that provides GitOps-based continuous delivery for Kubernetes.

ArgoCD offers several benefits over other CD systems, including:

- GitOps: ArgoCD uses a GitOps-based approach to manage Kubernetes resources, which means that all changes are committed to a Git repository and automatically deployed to the cluster. This approach provides a single source of truth for the entire infrastructure, making it easier to track changes, perform rollbacks, and audit for security compliance.

- Declarative Configuration: ArgoCD allows users to define the desired state of the Kubernetes resources using YAML files, enabling declarative configuration management. This approach simplifies the management of complex Kubernetes environments, making it easier to manage configurations across multiple clusters.

- Multi-Cluster Support: ArgoCD provides a single control plane for managing multiple clusters, making it easier to deploy applications across multiple environments. This feature is particularly useful for organizations with a large number of clusters, as it simplifies the management of resources across multiple Kubernetes clusters.

- Advanced Rollback Capabilities: ArgoCD supports advanced rollback capabilities, enabling users to easily roll back to previous versions of the application or infrastructure. This feature is critical in ensuring high availability and minimizing downtime.

- Scalability: ArgoCD is highly scalable, making it ideal for managing large and complex Kubernetes environments. It is designed to work with Kubernetes-native tools like Helm, Kustomize, and Jsonnet, enabling users to customize the deployment process as needed.

We use ArgoCD to manage our KubeVirt environment and infrastructure, which includes Calico, Rook-Ceph, Velero, VictoriaMetrics, and CDK8s. With ArgoCD, we can manage all our Kubernetes resources from a single control plane and deploy rapidly and at scale, while its declarative configuration keeps settings consistent across multiple clusters.
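As a sketch of what this looks like in a multi-cluster setup, the snippet below generates one ArgoCD `Application` per component and region. The repository URL, the directory layout, the cluster endpoints, and the choice of components are assumptions made for the example, not our real configuration.

```python
import json

# Hypothetical inputs: the repository URL, the per-region directory layout
# and the cluster API endpoints are assumptions made for this example.
REPO_URL = "https://git.example.com/infra/kubevirt-platform.git"
CLUSTERS = {
    "eu": "https://k8s-eu.example.com:6443",
    "us": "https://k8s-us.example.com:6443",
}
COMPONENTS = ["calico", "rook-ceph", "velero"]


def application(component: str, region: str, server: str) -> dict:
    """Build an ArgoCD Application that syncs one component to one cluster."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{component}-{region}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": REPO_URL,
                "path": f"{component}/{region}",
                "targetRevision": "main",
            },
            "destination": {"server": server, "namespace": component},
            # Automated sync: Git is the source of truth, drift is reverted.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


apps = [application(c, region, server) for region, server in CLUSTERS.items() for c in COMPONENTS]
print(json.dumps(apps[0], indent=2))
```

Adding a region then amounts to adding one entry to the cluster table, and rolling back is a Git revert.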

In addition, ArgoCD’s advanced rollback capabilities and multi-cluster support ensure that we can maintain high availability and minimize downtime in the event of an issue. And with ArgoCD’s scalability, we can easily manage our growing infrastructure without compromising performance.

In conclusion, ArgoCD's GitOps-based approach, declarative configuration management, multi-cluster support, rollback capabilities, and scalability make it an ideal choice for managing our multi-region environments and infrastructure.

Events? What events?

If you were reading closely, you noticed that we use Foreman for inventory management.

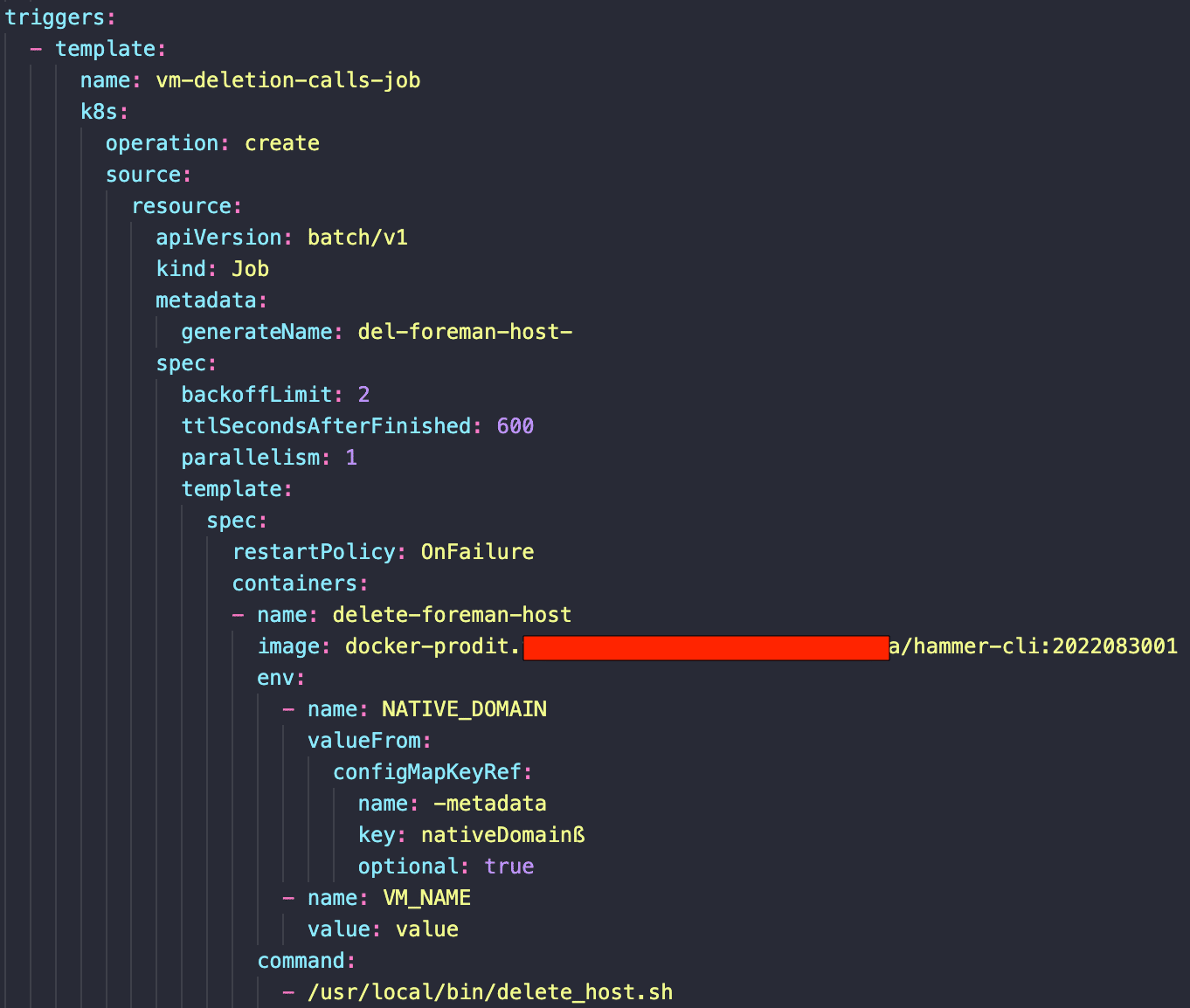

Argo Events is an open-source event-based system that allows you to trigger actions in response to specific events. It enables you to easily define, route, and filter events using a declarative syntax. With Argo Events, you can create event-driven workflows that perform various tasks, such as running jobs, deploying applications, or sending notifications.

Our use case for Argo Events is deleting Foreman objects. We use sensors to listen to events and automatically delete Foreman objects when a specific event occurs. For example, when a host is deleted, Argo Events can trigger a workflow that deletes the corresponding Foreman object, which keeps the Foreman inventory clean and up to date.

Overall, Argo Events provides a powerful and flexible way to automate tasks and workflows based on events, which can greatly improve efficiency and reduce manual effort.

(Snippet from argo events hook)
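The hook itself is not reproduced here. As an illustration of the action such a workflow could run, the sketch below turns a "VM deleted" event into a Foreman host deletion request. The event payload shape, the Foreman URL, and the assumption that the host can be addressed by name in `/api/hosts/` are all hypothetical; authentication is omitted and the request is only built, not sent.

```python
import urllib.request
from urllib.parse import quote

FOREMAN_URL = "https://foreman.example.com"  # hypothetical Foreman instance


def build_delete_request(event: dict) -> urllib.request.Request:
    """Turn a 'VM deleted' event into a Foreman host deletion request.

    The event shape ({"body": {"name": ...}}) is an assumption made for
    this sketch, not the payload our sensors actually receive.
    """
    host = event["body"]["name"]
    return urllib.request.Request(
        url=f"{FOREMAN_URL}/api/hosts/{quote(host, safe='')}",
        method="DELETE",
        headers={"Accept": "application/json"},
    )


# Build (but do not send) the request for a deleted VM.
request = build_delete_request({"body": {"name": "vm01.example.com"}})
print(request.get_method(), request.full_url)
```

Keeping the request construction separate from the network call makes the handler easy to test without a live Foreman.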

Friends and templates

Customizing Kubernetes objects is a crucial task in managing Kubernetes infrastructure.

Kustomize has been a popular tool for this purpose, but it has its limitations. In particular, as projects grow in complexity, the resulting YAML files can become unmanageably large and difficult to work with. This can lead to mistakes and inconsistencies in the deployment process.

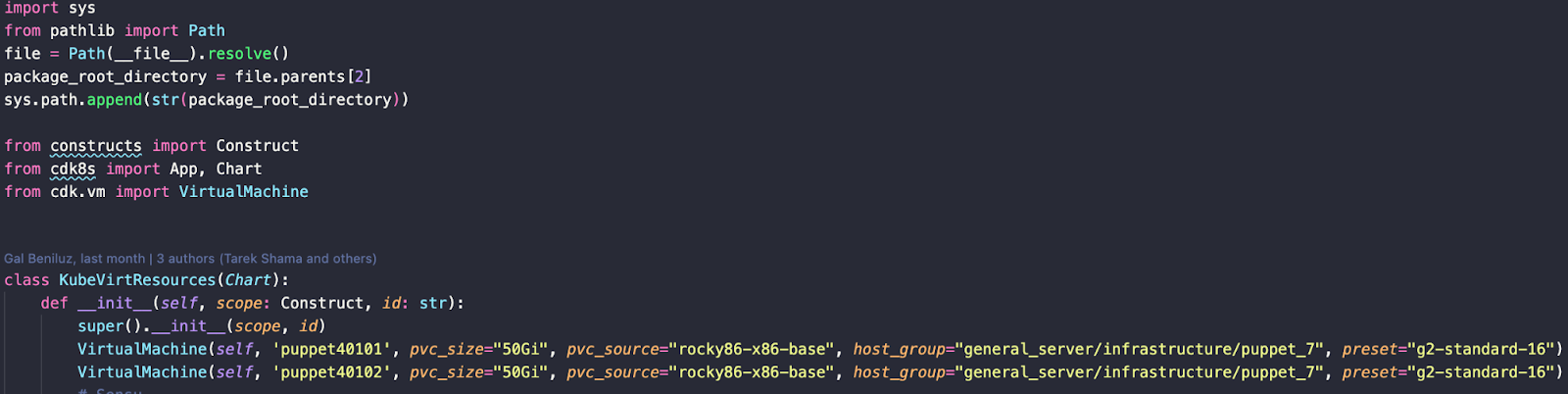

To address this issue, many teams have turned to cdk8s as an alternative to Kustomize. cdk8s is a framework that lets us define Kubernetes resources in a familiar programming language such as Python, which gives us a more flexible and modular approach. We write smaller, more manageable definitions that are easier to read and edit, and cdk8s generates the YAML from them.

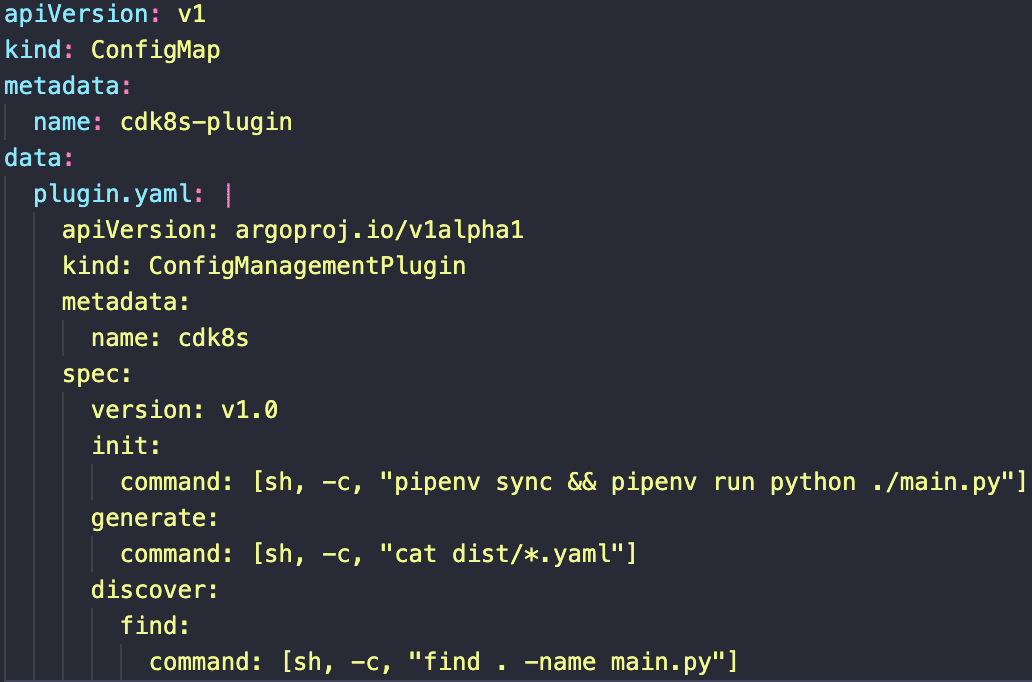

While cdk8s offers many benefits, one downside is that it is not officially supported by ArgoCD. To overcome this limitation, we created a custom Docker image that makes cdk8s work with ArgoCD.

(snippet from cdk8s configmap)

By doing so, we can continue to benefit from the advantages of cdk8s while also ensuring that our deployment process is reliable and consistent.

Now, adding VMs is as easy as 1-2-3:

(snippet from vm’s creation with python cdk8s)

That's it for now.

I want to thank my awesome team, without whom this wouldn't have been possible: Alex Bulatov, Gal Beniluz, Tarek Shama, and Maher Odeh.