A joint post with Ofri Mann

We went to ICLR to present our work on debugging ML models using uncertainty and attention. Between cocktail parties and jazz shows in the wonderful New Orleans (can we do all conferences in NOLA please?) we also saw a lot of interesting talks and posters. Below are our main takeaways from the conference.

Main themes

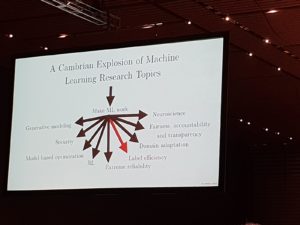

A good summary of the themes was in Ian Goodfellow’s talk, in which he said that until around 2013 the ML community was focused on making ML work. Now that it works on many different applications given enough data, the focus has shifted towards adding more capabilities to our models: we want them to comply with fairness, accountability and transparency constraints, to be robust, to use labels efficiently, to adapt to different domains and so on.

A slide on ML topics, from Ian Goodfellow’s talk

We noticed a few topics that got a lot of attention: Reinforcement Learning, GANs and adversarial examples, and Fairness were the most prominent ones. There was also a fair amount of work on training and optimization techniques, VAEs, quantization and understanding different behaviors of networks.

While the last day of the conference displayed many papers about different NLP tasks, Computer Vision felt to us like a solved problem and there were very few applied vision papers. However, most of the work on optimization, understanding networks, meta learning, etc. is in fact done on images. Even though the conference focuses on learning representations, there wasn’t much work on learning representations for structured or tabular data.

The notes below are grouped according to the main topics.

Reinforcement Learning

There were three workshops and an entire poster session (~90 papers) dedicated to Reinforcement Learning.

One of the prominent subjects this year was sparse-reward or no-reward reinforcement learning. It was well represented by the Task-Agnostic Reinforcement Learning workshop, multiple posters and short talks, as well as a great talk by Pierre-Yves Oudeyer on curiosity-driven learning, where information gain is used as an intrinsic reward, allowing algorithms to explore their environments and focus on the areas where information gain is highest. These intrinsic rewards can later be combined with extrinsic ones to achieve tasks with almost no explicit practice. In his talk, Oudeyer demonstrated how robots learn to manipulate their environment and perform tasks with no prior practice, and presented a novel math-teaching method in which a curiosity-driven algorithm personalizes each child’s curriculum, focusing on their weaker subjects.
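To make the idea of information gain as an intrinsic reward concrete, here is a minimal sketch. It is not the method from the talk: the coin-flip environment, the grid of candidate biases, and the `beta` trade-off are all assumptions chosen for illustration. The agent keeps a belief over a hidden parameter, and the reward for an observation is how much that observation changed the belief.

```python
import math

# Hypothetical setup: each region of the environment hides a coin whose bias
# is one of these candidate values; the agent keeps a belief over them.
GRID = [i / 10 for i in range(1, 10)]

def uniform_belief():
    return [1.0 / len(GRID)] * len(GRID)

def update(belief, outcome):
    """Bayes update of the belief after observing outcome 0 or 1."""
    likelihood = [p if outcome == 1 else 1.0 - p for p in GRID]
    unnormalized = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def information_gain(old, new):
    """KL(new || old) in nats: how much one observation changed the belief."""
    return sum(n * math.log(n / o) for n, o in zip(new, old) if n > 0)

def total_reward(extrinsic, intrinsic, beta=0.5):
    """Mix task reward with the curiosity bonus; beta is an assumed trade-off."""
    return extrinsic + beta * intrinsic

def gains_for(outcomes):
    """Information gain of each successive observation in one region."""
    belief, gains = uniform_belief(), []
    for outcome in outcomes:
        new_belief = update(belief, outcome)
        gains.append(information_gain(belief, new_belief))
        belief = new_belief
    return gains
```

Calling `gains_for([1] * 10)` shows the bonus shrinking as a region becomes predictable, which is what pushes a curious agent towards areas it has not yet figured out.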

Two other workshops dealt with structure in RL:

The Structure & Priors in Reinforcement Learning workshop focused on different ways to learn structure and introduce priors into RL tasks, so that agents can learn from fewer examples and generalize better at a lower computational cost.

The Deep Reinforcement Learning Meets Structured Prediction workshop suggested viewing structured prediction as a sequential decision-making process and focused on papers that leverage the advances in deep RL to improve structured prediction.

Generative Adversarial Models and Adversarial Examples

Besides Goodfellow’s keynote talk, which focused on GANs’ applications to different ML research areas, there was an entire poster session dedicated to GANs and adversarial examples. These also got a lot of attention in the Debugging ML Models workshop, the Deep Generative Models for Highly Structured Data workshop and the Safe Machine Learning: Specification, Robustness, and Assurance workshop.

For example, in his talk “A New Perspective on Adversarial Examples”, Aleksander Madry claimed that models learn both from robust features, which carry some meaning interpretable to humans, and from non-robust features, which are merely correlated with the labels. Since non-robust features are just as useful for maximizing accuracy, models rely on them as well. Therefore, he claims, we cannot hope for post-training interpretability, since some of the model’s predictive power comes from the non-robust features. Adversarial examples exploit those features, but from the model’s perspective there is no real difference between robust and non-robust features. To improve interpretability, Madry presented ways to force models to rely only on robust features during training.

Fairness, Accountability, Safety and AI for Social Good

This year there was a lot of (not necessarily technical) talk about issues related to fairness, safety and AI for Social Good.

Cynthia Dwork gave a great keynote about recent developments in algorithmic fairness, in which she presented two notions of fairness: group fairness, which addresses the relative treatment of different demographic groups, and individual fairness, which requires that people who are similar with respect to a given classification task be treated similarly by classifiers for that task. She talked about the challenges related to the two notions and about recent work aiming to bridge the gap between them, with an emphasis on multicalibration (being calibrated on different groups) and on fair ranking and scoring.
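As a rough illustration of what “calibrated on different groups” means, the sketch below computes, for every group and score bin, the gap between the average predicted score and the observed positive rate. This is our own simplified check, not an algorithm from the keynote: the function name, the binning scheme and the toy data are assumptions.

```python
from collections import defaultdict

def calibration_gaps(scores, labels, groups, n_bins=2):
    """Return {(group, bin): |mean score - positive rate|} per non-empty cell."""
    cells = defaultdict(list)
    for s, y, g in zip(scores, labels, groups):
        b = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        cells[(g, b)].append((s, y))
    gaps = {}
    for key, items in cells.items():
        mean_score = sum(s for s, _ in items) / len(items)
        positive_rate = sum(y for _, y in items) / len(items)
        gaps[key] = abs(mean_score - positive_rate)
    return gaps

# Toy data (made up): the same score of 0.8 is well calibrated for group "a"
# but badly over-confident for group "b".
scores = [0.8] * 7
labels = [1, 1, 1, 1, 0, 0, 0]
groups = ["a"] * 5 + ["b"] * 2
gaps = calibration_gaps(scores, labels, groups)
```

A model can look calibrated on the whole population while having a large gap inside one group, which is the kind of failure this per-group view is meant to expose.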

Another talk that aimed at sparking discussion about possible dangers in ML was Zeynep Tufekci’s “While we’re all worried about the failures of ML, what dangers lurk if it (mostly) works?”.

The AI for Social Good workshop focused on applying ML to problems important to society, such as health care, education and agriculture. The Safe ML workshop focused on specifying a system’s purpose, making systems more robust and monitoring them. The Reproducibility in ML workshop and the Debugging ML workshop (where we presented our work on uncertainty and attention) mainly focused on fairness and interpretability.

We read the strong emphasis on Fairness, Accountability and Transparency (FAT) by the conference organizers as an open invitation to the community to take part in this important discussion at the main ML conferences. While most related papers are published in dedicated venues like the FAT conference, and there weren’t many related papers in the main track this year, we expect this to change in upcoming years.

Other things we liked

There were many other interesting papers on topics outside the themes mentioned above. It’s hard to choose just a few out of the 500+ papers published in ICLR 19.

We compiled a few for some topics we are interested in.

Transfer Learning

A research group from the University of Illinois presented a very interesting paper, “Knowledge Flow – Improve upon your teachers”. It proposes a novel transfer learning method, the “Knowledge Flow” architecture.

A “student” network, training on a novel task, uses the intermediate layer weights from one or more “teacher” networks, pre-trained on other tasks. By using trainable transformation matrices and weight vectors, the model learns how much each teacher contributes to the student in different parts of the network.

In addition, the Knowledge Flow loss function includes the student’s dependency on its teachers, which decreases as training progresses.

Using this method, the teachers contribute heavily to the initial training steps, allowing for a quick “warm start” of the student network. As the student trains on the novel task, the teachers’ influence diminishes, until in the final training steps the student becomes independent of its teachers and uses only its own weights.
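The mechanism can be sketched roughly as follows. This is a loose illustration, not the paper’s exact formulation: the softmax over mixing logits, the function names, and the linearly growing penalty coefficient are our assumptions about one way to realize “trainable transformations and weights” and a dependency term that pushes the student towards independence.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def mixed_layer_output(student_out, teacher_outs, transforms, logits):
    """Combine the student's layer output with transformed teacher outputs.

    logits[0] belongs to the student, logits[1:] to the teachers. In training,
    both `transforms` and `logits` would be learned parameters.
    """
    weights = softmax(logits)
    mixed = [weights[0] * x for x in student_out]
    for w, q, t_out in zip(weights[1:], transforms, teacher_outs):
        projected = matvec(q, t_out)  # map teacher features to the student's size
        mixed = [m + w * p for m, p in zip(mixed, projected)]
    return mixed, weights

def dependency_penalty(weights, step, total_steps, max_coeff=1.0):
    """Assumed schedule: penalize teacher weights more as training progresses."""
    coeff = max_coeff * step / total_steps
    return coeff * sum(weights[1:])

# One teacher with 3 features feeding a student layer with 2 features.
mixed, weights = mixed_layer_output(
    student_out=[1.0, 2.0],
    teacher_outs=[[1.0, 1.0, 1.0]],
    transforms=[[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]],
    logits=[0.0, 0.0],
)
```

Adding `dependency_penalty` to the task loss means that leaning on the teachers is free at step 0 and costly near the end, so gradient descent gradually shifts the mixing weight onto the student.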

This works on both supervised and reinforcement learning tasks, and achieves top results and faster convergence on several test sets.

Another transfer learning paper we found interesting was “K for the price of 1: Parameter efficient multi task and transfer learning”.

It shows a unified framework for both transfer and multitask learning. The idea is to use model patches – a set of small trainable layers specific to each task that are interleaved with existing layers.

These patches can be used for transfer learning by fine-tuning only the patch parameters on the new task while keeping the rest of the pretrained layers unchanged. For multitask learning, it means that the models share most of their weights, except for the task-specific patches.
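Here is a toy sketch of the patch idea, under heavy simplifying assumptions: the “pretrained network” is a single frozen weight, the patch is a scale and a bias applied to its output, and the two tasks are made-up linear functions. It only illustrates the training pattern, where gradients update the patch and never the shared weights.

```python
def forward(x, base_w, patch):
    """Frozen base layer followed by a small task-specific patch."""
    hidden = base_w * x
    return patch["scale"] * hidden + patch["bias"]

def finetune_patch(data, base_w, patch, lr=0.05, epochs=500):
    """Gradient descent on squared error that updates only the patch."""
    patch = dict(patch)  # leave the caller's patch untouched
    for _ in range(epochs):
        grad_scale = grad_bias = 0.0
        for x, y in data:
            hidden = base_w * x
            err = patch["scale"] * hidden + patch["bias"] - y
            grad_scale += 2 * err * hidden / len(data)
            grad_bias += 2 * err / len(data)
        patch["scale"] -= lr * grad_scale
        patch["bias"] -= lr * grad_bias
    return patch  # base_w is never modified

BASE_W = 2.0  # stands in for the shared pretrained weights
identity_patch = {"scale": 1.0, "bias": 0.0}
task_a = [(x, 3.0 * x + 1.0) for x in (-1.0, 0.0, 1.0)]   # hypothetical task A
task_b = [(x, -2.0 * x + 0.5) for x in (-1.0, 0.0, 1.0)]  # hypothetical task B
patches = {
    "a": finetune_patch(task_a, BASE_W, identity_patch),
    "b": finetune_patch(task_b, BASE_W, identity_patch),
}
```

Both tasks reuse `BASE_W` and differ only in their two patch parameters, which is the multitask reading of the same setup.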

Uncertainty (Our favourite subject!)

Bias-reduced uncertainty estimation for Deep Neural Networks shows an “overfitting”-like phenomenon in uncertainty estimation: on easier cases, the uncertainty estimates improve at the beginning of training but degrade after a number of iterations. To prevent this, the authors recommend either averaging different checkpoints from training or early stopping.
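A minimal sketch of the checkpoint-averaging idea, assuming we have already saved the class probabilities that several training checkpoints predict for one example. The function names and numbers are made up, and the paper’s actual procedure may differ in its details.

```python
def average_checkpoints(per_checkpoint_probs):
    """Average class probabilities predicted by several training checkpoints."""
    n = len(per_checkpoint_probs)
    n_classes = len(per_checkpoint_probs[0])
    return [sum(p[c] for p in per_checkpoint_probs) / n for c in range(n_classes)]

def confidence(probs):
    """Use the highest class probability as a simple confidence score."""
    return max(probs)

# Hypothetical predictions for one example at three checkpoints.
checkpoints = [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7]]
averaged = average_checkpoints(checkpoints)
```

The averaged confidence is less extreme than that of any single late checkpoint, which smooths out the degradation described above.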

Modeling Uncertainty with Hedged Instance Embedding: what if, instead of learning deterministic embeddings, we learn probabilistic ones? Siamese networks are trained with a contrastive loss, but instead of outputting a point z as usual, the network outputs a mean and a standard deviation that define the Gaussian representing the embedding. The size of the Gaussian can later be treated as an uncertainty estimate.
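The sketch below shows what working with such Gaussian embeddings could look like. It is an illustration under our own assumptions, not the paper’s code: embeddings are diagonal Gaussians given as `(mean, std)` pairs, the match probability is a Monte Carlo average of a sigmoid over sampled distances, and the “size” of the Gaussian is taken to be the average standard deviation.

```python
import math
import random

def sample(mean, std, rng):
    """Draw one point from a diagonal Gaussian embedding."""
    return [rng.gauss(m, s) for m, s in zip(mean, std)]

def match_probability(emb_a, emb_b, n_samples=200, a=1.0, b=0.0, seed=0):
    """Monte Carlo estimate of how likely two inputs are to match.

    The soft threshold sigmoid(-a * distance + b) and its parameters are
    assumptions made for this sketch.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        za = sample(*emb_a, rng)
        zb = sample(*emb_b, rng)
        distance = math.dist(za, zb)
        total += 1.0 / (1.0 + math.exp(a * distance - b))
    return total / n_samples

def uncertainty(emb):
    """Average standard deviation as a simple 'size' of the Gaussian."""
    _, std = emb
    return sum(std) / len(std)

sharp = ([0.0, 0.0], [0.1, 0.1])  # confident embedding
vague = ([0.0, 0.0], [1.0, 1.0])  # ambiguous input, wide Gaussian
far = ([5.0, 5.0], [0.1, 0.1])    # confident embedding of a different item
```

Comparing `uncertainty(vague)` with `uncertainty(sharp)` gives the uncertainty signal, while `match_probability` plays the role the distance between two points plays for deterministic embeddings.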

Why Does Batch Normalization Work?

There were three papers worth mentioning that shed light on how batch normalization works: Towards Understanding Regularization in Batch Normalization, A Mean Field Theory of Batch Normalization, and Theoretical Analysis of Auto Rate-Tuning by Batch Normalization.

Some thoughts on attendance

The audience was a mix of academia and industry labs. A large number of papers came from industry research centers: DeepMind had around 50 papers, Google around 55, and Microsoft and Facebook around 30 each. There was also a fair amount of work coming from other companies in the industry.

We had a lot of fun and came back with many ideas we can’t wait to develop!